Chain of Thought Prompting: Unlock the Power of LLMs with Examples

Welcome to this comprehensive guide on Chain of Thought Prompting! If you've ever wondered how to enhance the reasoning capabilities of large language models, you're in the right place. This technique has had a major impact on how large language models are prompted for multi-step reasoning tasks in machine learning and natural language processing.

In this article, we'll delve into what Chain of Thought Prompting is, how it works, its benefits, and its practical applications. We'll also look at how the technique is applied with ChatGPT, LangChain, and Python. So, let's get started!

What is Chain of Thought Prompting?

Chain of Thought (CoT) Prompting is a technique that encourages Large Language Models (LLMs) to reason logically and sequentially. Standard prompting asks the model for an answer directly; CoT instead prompts it to work through a series of intermediate reasoning steps before giving its final answer. Because each step is written out, mistakes are easier to spot, and on multi-step problems this tends to produce more accurate and reliable outputs.

How Does It Work?

The core idea is to make the model's reasoning explicit, one step at a time. This can happen inside a single prompt (by showing worked examples), or by giving the LLM a series of prompts that guide its thinking process, where each prompt handles one step and its output is checked before moving on to the next. This way, errors can be caught and corrected before they carry into later steps.

Implementing Chain of Thought Prompting is like building a roadmap for your language model. Here's how to do it:

- Identify the Problem: Clearly define the multi-step problem you want the language model to solve.

- Break it Down: Decompose the problem into smaller tasks or questions that lead to the final solution.

- Create Prompts: For each smaller task, create a prompt that guides the language model.

- Execute: Feed these prompts to the language model in a sequence, collecting the outputs at each step.

- Analyze and Refine: Evaluate the outputs and refine the prompts if necessary.
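The steps above can be sketched in Python as a simple loop that feeds each sub-prompt to the model in order and collects every intermediate answer for later review. This is a minimal sketch, not any specific library's API: `run_prompt_chain` is a hypothetical helper, and `ask_llm` stands in for whatever function you use to call your model (replaced here by an offline stub so the code runs without an API key).

```python
from typing import Callable, List


def run_prompt_chain(steps: List[str], ask_llm: Callable[[str], str]) -> List[str]:
    """Send each sub-prompt to the model in order and return every intermediate answer.

    `ask_llm` is a placeholder for your model client (hypothetical): it takes a
    prompt string and returns the model's text reply.
    """
    outputs: List[str] = []
    context = ""  # transcript of earlier steps and their answers
    for i, step in enumerate(steps, start=1):
        # Prepend the earlier steps so this step can build on their results.
        prompt = f"{context}Step {i}: {step}\nAnswer:"
        answer = ask_llm(prompt)
        outputs.append(answer)  # keep each intermediate output for analysis/refinement
        context += f"Step {i}: {step}\nAnswer: {answer}\n"
    return outputs


if __name__ == "__main__":
    calls: List[str] = []

    def stub_llm(prompt: str) -> str:
        # Offline stand-in for a real model: it just numbers its replies.
        calls.append(prompt)
        return f"answer {len(calls)}"

    demo_steps = [
        "Calculate the total distance covered.",
        "Calculate the total time taken.",
        "Calculate the average speed as total distance divided by total time.",
    ]
    for step, answer in zip(demo_steps, run_prompt_chain(demo_steps, stub_llm)):
        print(f"{step} -> {answer}")
```

Because every intermediate answer is returned, you can see where a chain went wrong and refine only the prompt for that step.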

Example: Let's say you want to solve a math word problem about calculating the average speed of a car on a trip. Instead of asking the model to solve it directly, you could break it down into steps:

- Calculate the total distance covered.

- Calculate the total time taken.

- Calculate the average speed using the formula:

Average Speed = Total Distance / Total Time
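Here is the same decomposition written out as plain Python arithmetic. The trip data is hypothetical (a 120 km leg driven in 2 hours and a 60 km leg driven in 0.5 hours). It is chosen so that the shortcut of averaging the two leg speeds (60 and 120 km/h, giving 90 km/h) disagrees with the step-by-step answer, which is the kind of slip that working through explicit steps helps avoid.

```python
from typing import List, Tuple


def average_speed(legs: List[Tuple[float, float]]) -> float:
    """Average speed over a trip given as (distance_km, time_h) legs."""
    # Step 1: calculate the total distance covered.
    total_distance = sum(distance for distance, _ in legs)
    # Step 2: calculate the total time taken.
    total_time = sum(time for _, time in legs)
    # Step 3: Average Speed = Total Distance / Total Time.
    return total_distance / total_time


# Hypothetical trip: 120 km in 2 h, then 60 km in 0.5 h.
trip = [(120.0, 2.0), (60.0, 0.5)]
print(f"Average speed: {average_speed(trip):.1f} km/h")  # 180 km / 2.5 h = 72.0 km/h
```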

Benefits of Using Chain of Thought Prompting

Enhanced Reasoning and Problem-Solving

One of the most compelling advantages of Chain of Thought Prompting is its ability to significantly improve the reasoning capabilities of large language models. With standard prompting, language models often struggle with multi-step or complex reasoning tasks; CoT substantially narrows that gap.

- Accuracy: By breaking down a problem into smaller tasks, the model can focus on each step, leading to more accurate results.

- Complex Tasks: Whether it's solving math problems or making sense of intricate scenarios, CoT helps language models handle them more reliably.

Chain of Thought Prompting in ChatGPT

Chain of Thought Prompting Examples

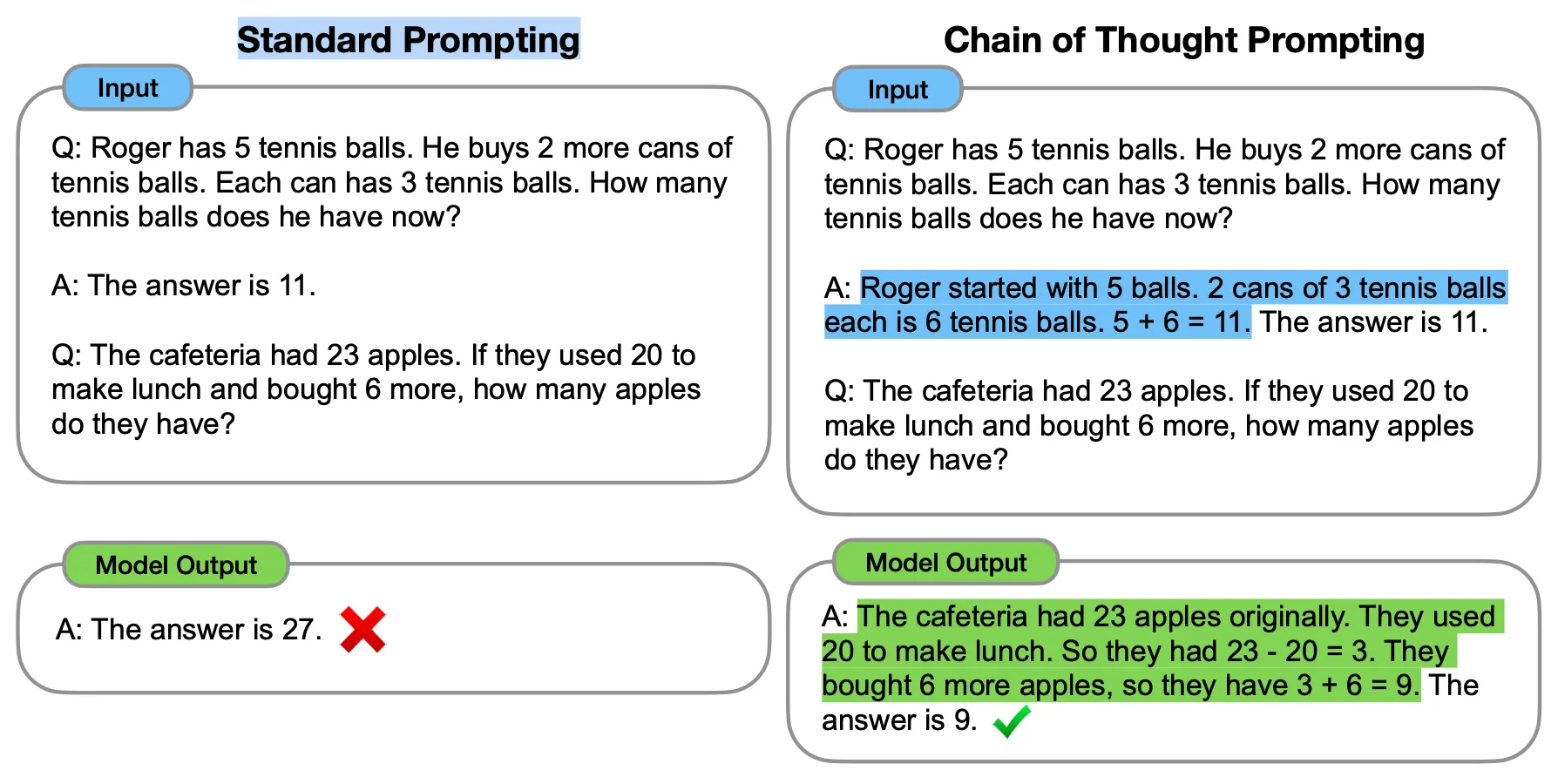

In ChatGPT and similar LLMs, CoT is usually applied through the prompt itself. Instead of asking for an answer directly, as standard prompting does, the prompt includes a few "few-shot exemplars": worked examples that show a question, the step-by-step reasoning, and the final answer. These exemplars guide the model through a logical sequence of steps.

The primary idea behind CoT is to use these few-shot exemplars to show the reasoning process explicitly. When prompted this way, the model also writes out its own reasoning steps before answering, which tends to make its answers more accurate and easier to check.
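As an illustration, here is one way to assemble a few-shot CoT prompt as a string. The exemplar follows the style of the worked examples used in the original chain-of-thought research (a question, the reasoning, then "The answer is ..."). The `Exemplar` class and `build_cot_prompt` function are hypothetical names, and the resulting text would be sent to whichever model or chat interface you use.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Exemplar:
    question: str
    reasoning: str  # the worked, step-by-step explanation the model should imitate
    answer: str


def build_cot_prompt(exemplars: List[Exemplar], question: str) -> str:
    """Assemble a few-shot chain-of-thought prompt: worked examples, then the new question."""
    parts = []
    for ex in exemplars:
        # Each exemplar shows the reasoning *before* the final answer.
        parts.append(f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}.")
    # The new question ends with "A:" so the model continues with its own reasoning.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


exemplars = [
    Exemplar(
        question=("Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
                  "Each can has 3 tennis balls. How many tennis balls does he have now?"),
        reasoning=("Roger started with 5 balls. 2 cans of 3 tennis balls each is "
                   "6 tennis balls. 5 + 6 = 11."),
        answer="11",
    ),
]

prompt = build_cot_prompt(
    exemplars,
    "The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, "
    "how many apples do they have?",
)
print(prompt)
```

With a prompt like this, a capable model is expected to answer in the same pattern, for example by writing out 23 - 20 = 3 and 3 + 6 = 9 before stating "The answer is 9."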

Chain of Thought Prompting Benchmarks

For instance, GPT-3 (text-davinci-003) was tested with a simple word problem. With standard prompting, it failed to solve the problem; when CoT prompting was applied, the model solved the same problem successfully. A single example like this illustrates how CoT can improve a model's problem-solving abilities.

CoT has proven effective at improving LLM performance on a range of tasks, including arithmetic, commonsense reasoning, and symbolic reasoning. In the original CoT experiments, a 540-billion-parameter model (PaLM 540B) prompted with just eight CoT exemplars reached about 57% accuracy on the GSM8K benchmark of math word problems, which was state of the art at the time.

![Chain of Thought Prompting Benchmarks](https://raw.githubusercontent.com/lynn-mikami/Images/main/chain-of-thought-prompting-chatgpt-3.webp)

It's worth noting that CoT is most effective with large models, roughly 100 billion parameters or more. Smaller models tend to produce fluent but illogical chains of thought, which can lower accuracy compared with standard prompting. As a result, the gains from CoT generally grow with model size.

Chain of Thought Prompting in LangChain

Here's how a multi-step, chain-of-thought-style workflow can be built in LangChain with Python:

```python
from langchain.chains import SequentialChain

# Define the chains. Each one is typically an LLMChain whose output_key matches
# an input variable of the next chain; their definitions are elided here.
chain1 = ...
chain2 = ...
chain3 = ...
chain4 = ...  # must produce the final "ranked_solutions" output

# Connect the chains: each chain's outputs become available as inputs to the later chains
overall_chain = SequentialChain(
    chains=[chain1, chain2, chain3, chain4],
    input_variables=["input", "perfect_factors"],
    output_variables=["ranked_solutions"],
    verbose=True,
)

# Execute the overall chain
print(overall_chain({
    "input": "human colonization of Mars",
    "perfect_factors": "The distance between Earth and Mars is very large, making regular resupply difficult",
}))
```

This code snippet shows how LangChain connects multiple chains using the `SequentialChain` class. The output of one chain becomes the input to the next, allowing for a multi-step chain of thought.
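To see how variables flow through a pipeline like this without installing LangChain or calling a model, here is a plain-Python sketch of the same idea: each step declares the keys it reads and the key it writes, and a shared dictionary carries values from one step to the next. This only mimics the data flow of `SequentialChain`; it is not LangChain's implementation. The `run_sequential` helper, the intermediate key names, and the lambda "chains" are hypothetical stand-ins for real LLM calls.

```python
from typing import Callable, Dict, List, Tuple

# A step: (input keys it reads, output key it writes, function computing the output).
Step = Tuple[List[str], str, Callable[..., str]]


def run_sequential(steps: List[Step], inputs: Dict[str, str],
                   output_keys: List[str]) -> Dict[str, str]:
    """Run steps in order; each output is stored under its key for later steps."""
    memory = dict(inputs)
    for in_keys, out_key, fn in steps:
        missing = [k for k in in_keys if k not in memory]
        if missing:
            raise KeyError(f"step producing {out_key!r} is missing inputs: {missing}")
        memory[out_key] = fn(*(memory[k] for k in in_keys))
    return {k: memory[k] for k in output_keys}


# Hypothetical stand-ins for the four LLM-backed chains (no model is called here).
steps: List[Step] = [
    (["input", "perfect_factors"], "solutions", lambda q, f: f"ideas for {q} given {f}"),
    (["solutions"], "review", lambda s: f"pros/cons of: {s}"),
    (["review"], "deepen_thought_process", lambda r: f"deeper look at: {r}"),
    (["deepen_thought_process"], "ranked_solutions", lambda d: f"ranking of: {d}"),
]

result = run_sequential(
    steps,
    {"input": "human colonization of Mars",
     "perfect_factors": "distance makes resupply difficult"},
    ["ranked_solutions"],
)
print(result["ranked_solutions"])
```

Checking for missing keys before each step mirrors the kind of wiring mistake (an `output_key` that does not match the next chain's input) that usually breaks a sequential pipeline.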

Conclusion

Summary of Key Points

We've explored the fascinating world of Chain of Thought Prompting (CoT), a technique that is changing how Large Language Models (LLMs) like ChatGPT are prompted, whether directly or through frameworks such as LangChain. Unlike standard prompting, CoT guides the model through a logical sequence of steps, enhancing its reasoning and problem-solving abilities. This results in more accurate and reliable outputs, especially in tasks that require complex reasoning.

Why CoT is a Game-Changer

CoT is more than just a new technique; it has become a standard tool in machine learning and natural language processing. By making models spell out their reasoning, CoT opens up new possibilities for applications that require complex problem-solving and logical reasoning. On arithmetic, commonsense, and symbolic reasoning tasks, it has been shown to improve accuracy substantially, particularly for large models.

FAQs

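What is chain of thought prompting in LangChain?

In LangChain, Chain of Thought Prompting is typically applied by writing step-by-step instructions or worked examples into a prompt template, or by splitting a task across several chains connected with `SequentialChain`. The related Tree of Thoughts (ToT) approach, available in LangChain's experimental package, goes further by combining LLMs with heuristic search over multiple reasoning paths, which lets it handle more complex language tasks.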

What is the chain of thought strategy?

The chain of thought strategy guides a model's reasoning through a series of explicit intermediate steps, sometimes called "thought nodes." When those steps are run as separate prompts, each output can be evaluated before moving on to the next, which makes it easier to catch and correct a step that goes off track.

What is an example of a chain of thoughts?

An example of a chain of thoughts would be breaking down a complex math problem into smaller tasks and solving them step-by-step. For instance, if the problem is to find the area of a trapezoid, the chain of thoughts might include calculating the average of the two parallel sides, then finding the height, and finally multiplying them to get the area.
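The trapezoid example can be written out the same way, one step per line. The side lengths and height below are hypothetical values chosen for illustration.

```python
def trapezoid_area(base_a: float, base_b: float, height: float) -> float:
    """Area of a trapezoid with parallel sides base_a and base_b."""
    # Step 1: calculate the average of the two parallel sides.
    mean_base = (base_a + base_b) / 2
    # Step 2: the height is the perpendicular distance between those sides (given here).
    # Step 3: multiply the average base by the height to get the area.
    return mean_base * height


print(trapezoid_area(6, 10, 4))  # (6 + 10) / 2 = 8.0; 8.0 * 4 = 32.0
```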