GGML - AI at the Edge

GGML Description

GGML is a tensor library for machine learning, written in C, that aims to enable the deployment of large and complex AI models on commodity hardware, particularly on edge devices. The name combines the initials of its creator, Georgi Gerganov, with "ML" for machine learning. Developed by Gerganov together with a community of open-source contributors, GGML is designed to address the growing demand for efficient, high-performance AI solutions that can operate in resource-constrained environments.

GGML Review

GGML stands out in the world of edge AI for a blend of performance, portability, and flexibility that sets it apart from traditional machine learning frameworks. At its core, GGML is a lightweight tensor library with no third-party dependencies that can run complex models on a wide range of hardware, from single-board computers such as the Raspberry Pi to high-performance GPUs. This versatility is a direct result of the library's focus on optimizing tensor operations and memory management for efficient inference.

One of the standout features of GGML is its ability to deliver strong performance on commodity hardware. It combines integer quantization of model weights with kernels tuned for the SIMD instructions of the target CPU (for example AVX on x86 and NEON on ARM). As a result, GGML can often match or outperform more heavyweight frameworks like TensorFlow or PyTorch when running inference on edge devices, where the runtime overhead of those frameworks is hardest to absorb. This advantage matters most in applications where real-time inference and low latency are critical, such as robotics, autonomous systems, and computer vision.
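One optimization behind this kind of performance is block-wise integer quantization, where weights are stored as small integers with one shared scale per block. The sketch below is a simplified pure-Python illustration of the idea, not GGML's actual kernels; the block size of 32 and the names `quantize_block` and `dequantize_block` are assumptions made for the example.

```python
# Simplified sketch of symmetric 8-bit block quantization.
# Illustrative only: names and layout are assumptions, not GGML's API.

BLOCK_SIZE = 32  # assumed number of weights that share one scale


def quantize_block(values):
    """Map floats to integers in [-127, 127] plus one float scale."""
    amax = max(abs(v) for v in values)
    scale = amax / 127.0 if amax > 0 else 1.0
    quants = [max(-127, min(127, round(v / scale))) for v in values]
    return scale, quants


def dequantize_block(scale, quants):
    """Recover approximate floats from the scale and the integers."""
    return [scale * q for q in quants]


# Deterministic sample weights in the range [-1.0, 1.0)
weights = [((i * 37) % 64 - 32) / 32.0 for i in range(BLOCK_SIZE)]
scale, quants = quantize_block(weights)
restored = dequantize_block(scale, quants)

# Rounding to the nearest integer bounds the error by half a scale step
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

The trade-off is visible in `max_error`: each weight loses at most half a quantization step of precision, in exchange for storing one byte per weight plus a small per-block scale.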

In addition to its performance, GGML also shines in terms of portability. The library is designed to be cross-platform, with support for hardware architectures including ARM, x86, and RISC-V. This makes it an attractive choice for developers who need to deploy their AI solutions across a diverse range of edge devices, from embedded systems to mobile platforms.

The flexibility of GGML extends beyond just hardware support. The library offers a range of model loading and deployment options, allowing developers to integrate it seamlessly into their existing workflows and infrastructure. Whether you're working with pre-trained models or need to fine-tune your own custom models, GGML provides the tools and functionality to get the job done efficiently.
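Models for GGML-based tools are commonly distributed as GGUF files. As a rough sketch of where loading begins, the code below parses the fixed-size header at the start of such a file. It assumes the little-endian layout used by GGUF versions 2 and 3 (a 4-byte `GGUF` magic, a 32-bit version, then 64-bit tensor and metadata counts); the helper name `read_gguf_header` is hypothetical, and the GGUF specification should be checked before relying on it.

```python
import io
import struct

GGUF_MAGIC = b"GGUF"


def read_gguf_header(stream):
    """Read magic, version, tensor count, and metadata count (assumed v2/v3 layout)."""
    magic = stream.read(4)
    if magic != GGUF_MAGIC:
        raise ValueError("not a GGUF file")
    raw = stream.read(20)  # uint32 version + two uint64 counts
    if len(raw) != 20:
        raise ValueError("truncated header")
    version, tensor_count, kv_count = struct.unpack("<IQQ", raw)
    return {"version": version, "tensors": tensor_count, "metadata_kv": kv_count}


# Synthetic header for demonstration; a real file would come from open(path, "rb").
fake_file = io.BytesIO(GGUF_MAGIC + struct.pack("<IQQ", 3, 291, 24))
header = read_gguf_header(fake_file)
```

Reading only the header is a cheap way to validate a file before committing memory to its tensors.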

One area where GGML stands out is memory management. Rather than allocating memory piecemeal while a model runs, GGML reserves the memory for its tensors up front, which keeps usage predictable and avoids allocation overhead during inference. Combined with kernels tailored to each hardware platform, this lets GGML run models that would otherwise not fit, or not run fast enough, on resource-constrained edge devices.
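The idea of reserving memory once and carving tensors out of it can be sketched as a bump (arena) allocator. This is a conceptual illustration in Python, not GGML's implementation; the `Arena` class, its methods, and the 32-byte alignment are assumptions made for the example.

```python
class Arena:
    """Fixed-size buffer that hands out aligned slices and never grows."""

    def __init__(self, size_bytes, alignment=32):
        self.buffer = bytearray(size_bytes)  # the only allocation
        self.alignment = alignment
        self.offset = 0

    def alloc(self, n_bytes):
        """Return a writable view of n_bytes, or fail if the arena is full."""
        # Round the current offset up to the next aligned position
        start = -(-self.offset // self.alignment) * self.alignment
        end = start + n_bytes
        if end > len(self.buffer):
            raise MemoryError("arena exhausted; size it for the whole model up front")
        self.offset = end
        return memoryview(self.buffer)[start:end]

    def used(self):
        """Bytes consumed so far, including alignment padding."""
        return self.offset


arena = Arena(1024)
a = arena.alloc(100)  # occupies bytes 0..99
b = arena.alloc(200)  # starts at the next 32-byte boundary (byte 128)
a[0] = 7              # views write straight into the shared buffer
```

Because the buffer size is fixed, running out of space fails immediately and visibly, which is usually preferable on a device with no memory to spare.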

While GGML is primarily designed for inference, the library also includes automatic differentiation and built-in optimizers, so it can be used for training small to medium-sized models, particularly on edge devices with limited resources. This flexibility expands the range of use cases where GGML can be applied, making it a valuable tool for developers working on a variety of AI-powered applications.
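Training of this kind rests on automatic differentiation over a computation graph. The following is a minimal reverse-mode sketch in Python that shows the principle on scalars; the `Value` class is hypothetical and far simpler than a tensor-level implementation such as GGML's.

```python
class Value:
    """Scalar node in a computation graph with reverse-mode gradients."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self.parents = parents          # nodes this value was computed from
        self.local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other):
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def backward(self):
        """Accumulate d(self)/d(node) into every node, in reverse topological order."""
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in zip(node.parents, node.local_grads):
                parent.grad += local * node.grad


# One gradient-descent step on loss = (w * x + b)^2, i.e. a target of zero
w, x, b = Value(2.0), Value(3.0), Value(1.0)
y = w * x + b
loss = y * y
loss.backward()

learning_rate = 0.01
w_updated = w.data - learning_rate * w.grad
```

The graph is built first and differentiated afterwards, which is the same define-then-compute pattern a graph-based tensor library follows at larger scale.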

The growing community of GGML users and contributors is another testament to the library's capabilities and potential. As more developers discover the benefits of GGML, the project is gaining traction and attracting a diverse range of contributors, from academic researchers to industry practitioners. This community support, combined with the extensive documentation and resources available, makes GGML an increasingly attractive choice for developers looking to deploy AI at the edge.

GGML Use Cases

GGML's versatility and performance make it applicable to a wide range of use cases, including:

- Embedded Systems and IoT Devices: GGML's efficient design and low resource requirements make it an ideal choice for running AI models on low-power embedded systems and IoT devices, enabling intelligent edge computing and real-time decision-making.

- Mobile and Edge Computing Applications: With its cross-platform support and optimization for mobile and edge hardware, GGML is well-suited for deploying AI-powered applications on smartphones, tablets, and other edge computing devices.

- Real-time Inference and Decision-making: The library's focus on low-latency inference and efficient memory management makes it a compelling choice for applications that require real-time processing and decision-making, such as robotics, autonomous systems, and industrial automation.

- Computer Vision and Image Processing: GGML's performance and hardware support make it a powerful tool for deploying computer vision and image processing models on edge devices, enabling applications like object detection, image classification, and augmented reality.

-

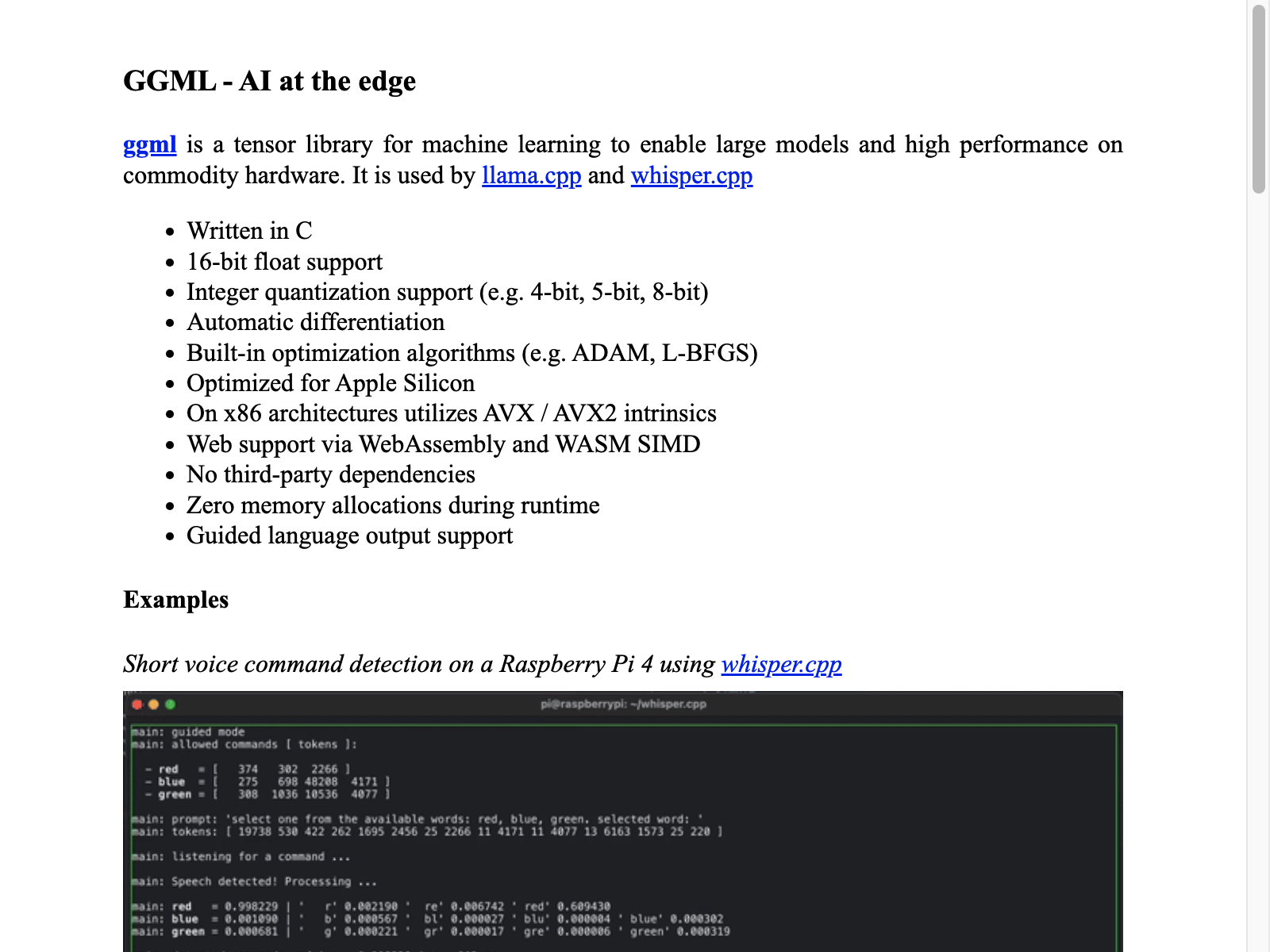

Natural Language Processing and Generation: While not as widely used for NLP tasks as some other frameworks, GGML can still be a viable option for running lightweight language models on edge devices, enabling applications like chatbots, voice assistants, and language translation.

- Robotics and Autonomous Systems: The combination of GGML's performance, portability, and efficient memory management makes it a compelling choice for running AI models on robotic and autonomous systems, where real-time inference and low power consumption are critical.

GGML Key Features

- Efficient Tensor Operations: GGML is designed to optimize tensor operations for high-performance inference, leveraging low-level hardware features and advanced optimization techniques to deliver impressive performance on a wide range of devices.

- Hardware Platform Support: The library supports a diverse range of hardware architectures, including ARM, x86, and RISC-V, as well as GPU acceleration, allowing developers to deploy their AI models on a variety of edge devices.

- Optimized Memory Management: GGML's focus on efficient memory management and low-level hardware utilization helps to minimize resource consumption and enable the deployment of larger models on resource-constrained edge devices.

- Flexible Model Loading and Deployment: GGML provides a range of options for loading and deploying AI models, allowing developers to integrate the library seamlessly into their existing workflows and infrastructure.

- Extensive Documentation and Community: The GGML project is well-documented, with a growing community of contributors and users who provide support, share best practices, and collaborate on new features and improvements.
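To make the memory point concrete, the arithmetic below estimates weight storage for a model at different precisions. The per-block sizes assume blocks of 32 weights with a 2-byte scale, which matches the Q8_0 and Q4_0 quantization layouts used by GGML; the 7-billion-parameter model and the function name `weight_bytes` are illustrative assumptions, and real model files also carry metadata and may keep some tensors at higher precision.

```python
def weight_bytes(n_params, bytes_per_block, block_size=32):
    """Approximate storage for n_params weights packed in fixed-size blocks."""
    n_blocks = -(-n_params // block_size)  # ceiling division
    return n_blocks * bytes_per_block


N_PARAMS = 7_000_000_000  # hypothetical model size

# Bytes needed to store one block of 32 weights in each format
FORMATS = {
    "f16": 32 * 2,   # 32 half-precision floats
    "q8_0": 2 + 32,  # 2-byte scale + 32 one-byte quants
    "q4_0": 2 + 16,  # 2-byte scale + 32 four-bit quants
}

sizes_gib = {
    name: weight_bytes(N_PARAMS, block_bytes) / 2**30
    for name, block_bytes in FORMATS.items()
}

for name, gib in sizes_gib.items():
    print(f"{name}: {gib:.1f} GiB")
```

Under these assumptions the 4-bit format costs 4.5 bits per weight (18 bytes × 8 / 32), a little over a quarter of the half-precision size, which is what brings multi-billion-parameter models within reach of ordinary laptops and single-board computers.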

Pros and Cons

Pros:

- Excellent performance on commodity hardware, often outperforming more heavyweight frameworks

- Highly portable and scalable across a wide range of hardware platforms and architectures

- Efficient memory management and low-level hardware optimization for resource-constrained environments

- Extensive documentation and a supportive community of contributors

Cons:

- Limited support for training large-scale models, with a primary focus on efficient inference

- May require more manual optimization and configuration compared to some high-level machine learning frameworks

Pricing

GGML is an open-source library released under the MIT license, and the core functionality is available free of charge. However, the project also offers commercial support and consulting services for organizations that require more advanced features, customization, or dedicated technical assistance.

FAQs

- What is the difference between GGML and other machine learning frameworks? GGML is primarily focused on efficient inference on edge devices, while frameworks like TensorFlow or PyTorch are more geared towards training and development of large-scale models. GGML's emphasis is on performance, portability, and resource optimization for deployment on a wide range of edge hardware.

- Can GGML be used for training models? While GGML is primarily designed for inference, it can also be used for training small to medium-sized models, especially on edge devices with limited resources. However, its primary strength lies in efficient model deployment and inference on edge hardware.

- What hardware platforms does GGML support? GGML supports a wide range of hardware platforms, including ARM, x86, and RISC-V architectures, as well as GPUs and other specialized hardware. This broad hardware support is a key advantage of the library, allowing developers to deploy their AI models on a diverse range of edge devices.

- How does GGML compare to other edge AI solutions? GGML stands out for its focus on performance, portability, and efficient memory management. Compared to other edge AI solutions, its emphasis on low-level hardware optimization and cross-platform support gives it an advantage in delivering high-performance inference on resource-constrained edge hardware.

For more information, please visit the GGML website.