Guanaco 65B: Open-Source Finetuned Chatbots That Challenge GPT-4


In the fast-moving field of artificial intelligence, text-generation models have become the foundation of many applications, from chatbots to content creation. Guanaco 65B stands out as an openly available chatbot model that has drawn significant attention for its capabilities. But what makes it so special? In this guide, we'll dig into the details of Guanaco 65B, exploring its different versions, its relationship to the QLoRA fine-tuning method, and how to get it running.

If you're a developer, data scientist, or just an AI enthusiast, understanding Guanaco 65B could be your next big leap in the field. Whether you're looking to generate text, analyze data, or develop a new application, this guide will serve as your ultimate resource. So, let's get started!

Want to learn the latest LLM News? Check out the latest LLM leaderboard!

What is Guanaco 65B and Why Should You Care?

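Guanaco 65B is a chatbot model for text generation, created by fine-tuning Meta's LLaMA 65B base model with QLoRA on the OASST1 conversation dataset. It was introduced in 2023 by Tim Dettmers and colleagues in the paper that presented QLoRA. It's not just another model: it comes in several versions to suit different hardware, from full-precision weights for multi-GPU setups to 4-bit quantized files for smaller GPUs and builds meant for CPU inference. Whether you're working with a CPU or a high-end GPU, there's a Guanaco 65B for you. We'll break these versions down later in this guide.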

Benchmarking Guanaco 65B: How Does It Stack Up?

When it comes to evaluating the performance of language models like Guanaco 65B, benchmarks are crucial. They provide a standardized way to measure various aspects such as speed, accuracy, and overall capabilities. However, benchmarks are not the only way to gauge a model's effectiveness. User experiences and community discussions also offer valuable insights.

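Unfortunately, at the time of writing, we could not find Guanaco 65B on any official leaderboards. The QLoRA paper that introduced the model did evaluate it, though: on the Vicuna benchmark, with GPT-4 acting as the judge, Guanaco 65B reached 99.3% of ChatGPT's performance level. It's also worth noting that:

- Fine-Tuning: Guanaco 65B is one of the few fine-tuned chat models built on the 65B LLaMA base model, making it a relatively unique offering.

- Specialized Tasks: Because the model was trained with QLoRA, a memory-efficient fine-tuning method, it can be fine-tuned further for specialized tasks on a single large GPU, although how well that works for a particular task needs to be verified empirically.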

Advantages and Drawbacks of Guanaco 65B

Based on user reports, here are the main strengths of Guanaco 65B, along with one notable drawback:

- Roleplay and Character Consistency: Users noted that Guanaco models excel at keeping characters consistent, especially when character cards are used.

- Text Generation: Guanaco models are praised for generating long responses while following prompts well.

- Speed (drawback): Some users found the 65B version slow, which limits its usefulness for latency-sensitive tasks.

Guanaco 65B Licensing

Before diving into Guanaco 65B, it's crucial to understand the licensing terms attached to each version, since they govern how you can use, modify, and distribute the model.

- Guanaco Weights: Check the license listed on each repository's model card; the Guanaco fine-tuned weights themselves were released under an open-source license.

- Base Model License: Guanaco is built on LLaMA, so using it is also subject to the LLaMA license, which at the time of release limited use to non-commercial research. Keep this in mind before using the model in a for-profit setting.

You can check the Guanaco 65B page on Hugging Face for the exact license text.

A Step-by-Step Guide to Using Guanaco 65B

Navigating the world of text-generation models can be daunting, especially if you're new to the field. That's why we've prepared a comprehensive, step-by-step guide to get you up and running with Guanaco 65B.

Step 1. Download Guanaco 65B

Before you can start generating text, you'll need to download the Guanaco 65B model that best suits your needs. Here's how:

- Visit the Hugging Face Repository: Go to the Hugging Face website and search for the Guanaco 65B version you want.

- Select the Model: Click on the model to go to its dedicated page.

- Copy the Model Name: You'll find the model name near the top of the page. Copy it for later use.
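Strictly speaking, you don't have to download anything by hand: `from_pretrained` in the next step fetches the files on first use. If you'd rather fetch them ahead of time, for example onto a large disk, a minimal sketch using the `huggingface_hub` library's `snapshot_download` could look like this. It assumes `huggingface_hub` is installed; the helper names are hypothetical, and the repo ID check is only a rough sanity check on the name you copied, not the Hub's full naming rules.

```python
from typing import Optional


def looks_like_repo_id(name: str) -> bool:
    """Rough check for an "owner/model" repo ID; not the Hub's full naming rules."""
    owner, sep, model = name.strip().partition("/")
    return bool(sep) and bool(owner) and bool(model) and "/" not in model


def download_model(repo_id: str, local_dir: Optional[str] = None) -> str:
    """Download all files of a Hugging Face model repo and return the local folder path."""
    if not looks_like_repo_id(repo_id):
        raise ValueError(f"expected 'owner/model', got {repo_id!r}")
    # Imported here so the check above works even without huggingface_hub installed
    from huggingface_hub import snapshot_download

    # With local_dir=None the files go to the standard Hugging Face cache
    return snapshot_download(repo_id=repo_id, local_dir=local_dir)
```

For example, `download_model("TheBloke/guanaco-65B-GPTQ", local_dir="guanaco-65B-GPTQ")` would fetch the 4-bit files; expect tens of gigabytes.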

Step 2. Text Generation Using Guanaco 65B

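Once you've chosen a model, it's time to write some code. Below is a Python snippet that demonstrates how to generate text using Guanaco 65B; `from_pretrained` downloads the weights automatically the first time it runs.

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# Repo ID as given in this article; verify the exact name on the Hugging Face Hub
model_id = "TheBloke/guanaco-65B"

# Initialize the tokenizer and model (fp16 weights, spread across the available GPUs)
tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.float16, device_map="auto")

# Prepare the text input in the "### Human: ... ### Assistant:" format Guanaco was trained on
input_text = "### Human: Tell me a joke. ### Assistant:"
input_ids = tokenizer(input_text, return_tensors="pt").input_ids.to(model.device)

# Generate text; without max_new_tokens, generation may stop after a short default length
output = model.generate(input_ids, max_new_tokens=128)

# Decode and print the generated text
print(tokenizer.decode(output[0], skip_special_tokens=True))
```

To run this code, make sure you've installed the transformers library, along with PyTorch and accelerate (which `device_map="auto"` relies on). Keep in mind that the full-precision model's fp16 weights alone need roughly 130 GB of GPU memory. If the libraries are missing, you can install them using pip:

```bash
pip install transformers accelerate torch
```

And there you have it: a simple yet effective way to generate text using Guanaco 65B. Whether you're building a chatbot, a content generator, or any other text-based application, this model has got you covered.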
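Guanaco was fine-tuned on conversations marked with `### Human:` and `### Assistant:`, so prompts in that shape tend to work best, and the model sometimes keeps going and writes the next human turn itself. Here is a small sketch of two helpers for building multi-turn prompts and trimming replies; the function names are hypothetical, and the exact spacing around the markers is an assumption.

```python
from typing import Sequence, Tuple

HUMAN = "### Human:"
ASSISTANT = "### Assistant:"


def build_guanaco_prompt(message: str, history: Sequence[Tuple[str, str]] = ()) -> str:
    """Format earlier (user, assistant) turns followed by a new user message."""
    parts = [f"{HUMAN} {user} {ASSISTANT} {reply}" for user, reply in history]
    # End with the assistant marker so the model continues as the assistant
    parts.append(f"{HUMAN} {message} {ASSISTANT}")
    return " ".join(parts)


def extract_reply(new_text: str) -> str:
    """Trim generated text (prompt excluded) at any human turn the model starts on its own."""
    return new_text.split(HUMAN, 1)[0].strip()
```

In the Step 2 script, you could pass `build_guanaco_prompt("Tell me a joke.")` as `input_text`, then call `extract_reply(tokenizer.decode(output[0][input_ids.shape[1]:], skip_special_tokens=True))`; slicing off the first `input_ids.shape[1]` tokens drops the echoed prompt before decoding.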

Step 3. Using Guanaco 65B with Google Colab

If you'd rather not set up a local environment, you can run Guanaco 65B in a Google Colab notebook. Be aware of its size, though: even quantized to 4 bits, the weights take roughly 33 GB, more than the 16 GB T4 GPU on Colab's free tier provides, so you'll need a runtime with a larger GPU.

- Ease of Use: Install the libraries in a notebook cell, load the model, and start generating.

- Collaboration: Colab's sharing features make it easy to collaborate on projects.
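Before loading anything in Colab, it helps to check how much GPU memory the runtime actually has, since Colab assigns different GPUs depending on your plan. A minimal sketch, assuming PyTorch is available (Colab preinstalls it); the helper names are hypothetical and the 33 GB figure is our own rough estimate for 4-bit weights alone.

```python
def gpu_memory_gb(device_index: int = 0) -> float:
    """Total memory of one CUDA device in GB, or 0.0 if PyTorch or a GPU is missing."""
    try:
        import torch
    except ImportError:
        return 0.0
    if not torch.cuda.is_available():
        return 0.0
    return torch.cuda.get_device_properties(device_index).total_memory / 1e9


def has_enough_gpu_memory(required_gb: float, device_index: int = 0) -> bool:
    """Compare a rough requirement against the device's total (not currently free) memory."""
    return gpu_memory_gb(device_index) >= required_gb


if __name__ == "__main__":
    print(f"GPU memory: {gpu_memory_gb():.1f} GB")
    # ~33 GB: rough size of a 65B model's weights at 4 bits, before any runtime overhead
    print("Enough for 4-bit Guanaco 65B weights:", has_enough_gpu_memory(33.0))
```

Total memory is an upper bound; activations and the generation cache need room on top of the weights.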

Here's a quick code snippet to use Guanaco 65B in Google Colab:

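```python
# Sample code for using Guanaco 65B in Google Colab
# Install the libraries first (bitsandbytes enables 4-bit loading)
!pip install transformers accelerate bitsandbytes

import torch
from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig

model_id = "TheBloke/guanaco-65B"  # repo ID as given in this article; verify it on the Hub
tokenizer = AutoTokenizer.from_pretrained(model_id)

# Load the weights in 4-bit: about a quarter of the fp16 size, but still roughly 33 GB
bnb_config = BitsAndBytesConfig(load_in_4bit=True, bnb_4bit_compute_dtype=torch.float16)
model = AutoModelForCausalLM.from_pretrained(model_id, quantization_config=bnb_config, device_map="auto")

input_text = "### Human: What is AI? ### Assistant:"
input_ids = tokenizer(input_text, return_tensors="pt").input_ids.to(model.device)
output = model.generate(input_ids, max_new_tokens=128)
print(tokenizer.decode(output[0], skip_special_tokens=True))
```

Different Versions of Guanaco 65B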

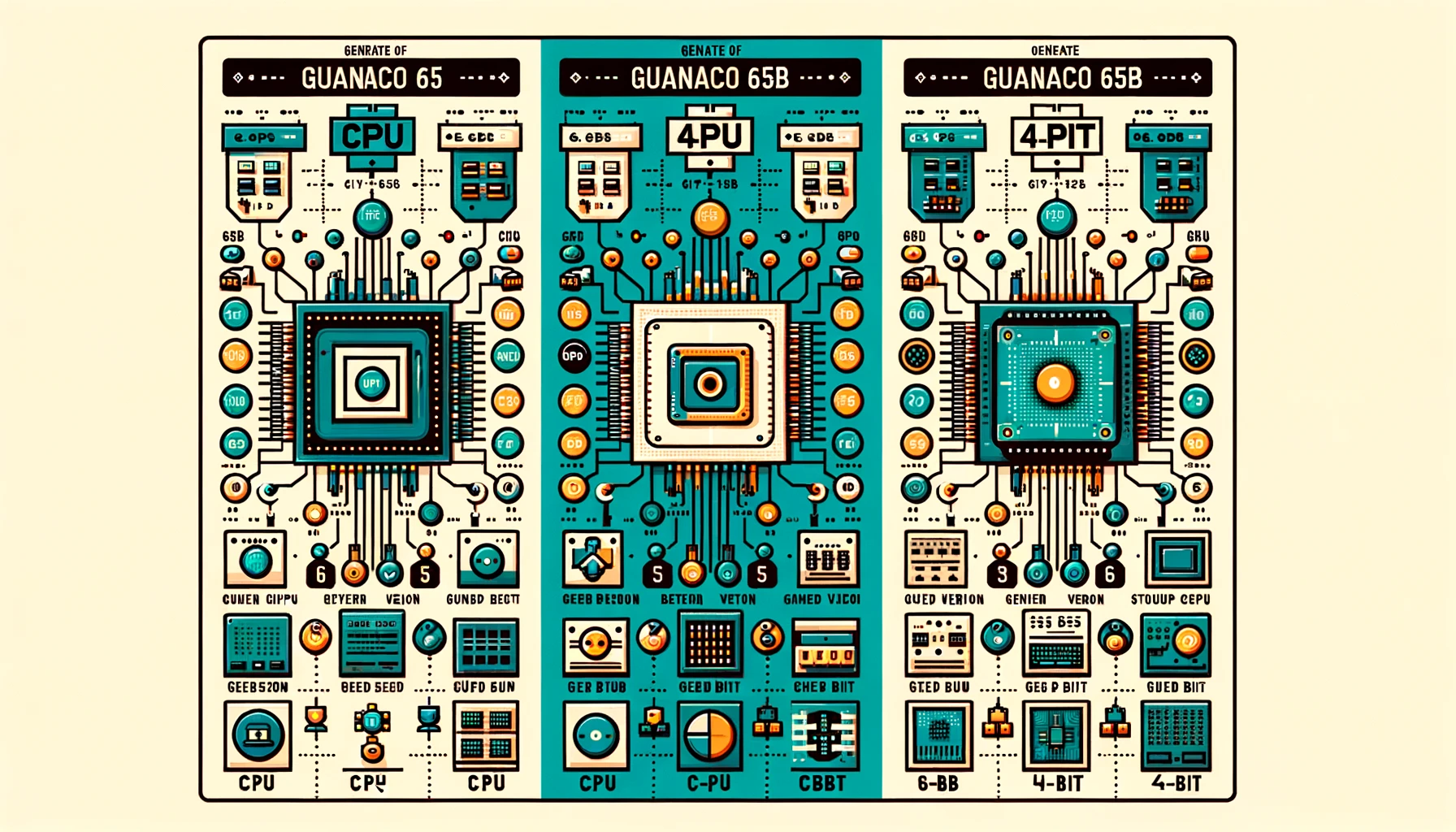

Using the 4-Bit Guanaco 65B Version

When it comes to optimizing for both performance and memory, the 4-bit Guanaco 65B-GPTQ version is a game-changer. It still runs on GPUs, but it uses GPTQ quantization to store the weights in 4 bits, cutting their size to roughly a quarter of the 16-bit original without significantly compromising output quality.

Features:

- Reduced Memory Footprint: The 4-bit quantization drastically reduces the VRAM requirements.

- Multiple Quantization Parameters: The quantized files come in variants with different quantization settings, so you can trade accuracy against memory use.

- Near Original Performance: Despite the reduced size, the model maintains a high level of accuracy.
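The memory savings follow from simple arithmetic: weight storage is roughly the parameter count times the bits per weight, divided by 8 to get bytes. Here is a sketch of that estimate. It ignores activations, the generation cache, and quantization metadata such as scales, so real usage is somewhat higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate storage for model weights in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    params = 65e9  # nominal parameter count of a 65B model
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

For 65B parameters this gives about 130 GB at 16 bits, 65 GB at 8 bits, and 32.5 GB at 4 bits, which is why the 4-bit version fits on a single large GPU while the fp16 version does not.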

Who Should Use It?

- Researchers focusing on efficient machine learning

- Developers with limited GPU resources

- Anyone experimenting with large models on more modest hardware

Here's a sample code snippet to use Guanaco 65B-GPTQ:

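```python
# Loading GPTQ files through transformers assumes the optimum and auto-gptq packages are
# installed (pip install transformers optimum auto-gptq accelerate) and that the
# repository's config.json includes its GPTQ quantization settings.
from transformers import AutoTokenizer, AutoModelForCausalLM

model_id = "TheBloke/guanaco-65B-GPTQ"
tokenizer = AutoTokenizer.from_pretrained(model_id)
# device_map="auto" places the quantized layers on the available GPU(s)
model = AutoModelForCausalLM.from_pretrained(model_id, device_map="auto")

# Guanaco expects the "### Human: ... ### Assistant:" prompt format
input_text = "### Human: What's the weather like? ### Assistant:"
input_ids = tokenizer(input_text, return_tensors="pt").input_ids.to(model.device)

output = model.generate(input_ids, max_new_tokens=128)
print(tokenizer.decode(output[0], skip_special_tokens=True))
```

By choosing the right version of Guanaco 65B, you can tailor your text-generation tasks to fit your hardware. Whether it's the CPU, GPU, or 4-bit version, each offers advantages that cater to a wide range of needs.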
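As a rough rule of thumb, that choice can be written down as a small decision function. The thresholds below are our own estimates (about 130 GB of fp16 weights and about 33 GB of 4-bit weights, each rounded up for headroom), not official requirements, and the returned labels are descriptive rather than exact repository names.

```python
# Hypothetical thresholds in GB: weight size rounded up to leave room for runtime overhead
FP16_GPU_GB = 160.0    # ~130 GB of fp16 weights plus headroom
QUANT_4BIT_GB = 40.0   # ~33 GB of 4-bit weights plus headroom


def choose_variant(gpu_memory_gb: float, system_ram_gb: float) -> str:
    """Pick a Guanaco 65B build from total GPU memory (all devices) and system RAM."""
    if gpu_memory_gb >= FP16_GPU_GB:
        return "fp16 (GPU)"
    if gpu_memory_gb >= QUANT_4BIT_GB:
        return "4-bit GPTQ (GPU)"
    if system_ram_gb >= QUANT_4BIT_GB:
        return "quantized CPU build"
    return "too little memory for a 65B model"
```

Under these thresholds, a single 48 GB GPU lands on the 4-bit GPTQ build, while a machine with a 16 GB GPU but 64 GB of RAM falls back to a CPU build.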

Use Guanaco 65B with QLoRA

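One of the standout features of Guanaco 65B is its close tie to QLoRA (Quantized Low-Rank Adaptation). QLoRA is not a separate model but the fine-tuning method used to create Guanaco: it keeps the base model frozen in 4-bit precision and trains small low-rank adapter (LoRA) weights on top of it, which allowed the authors to fine-tune a 65B model on a single 48 GB GPU.

- Adapter-Based Weights: The original Guanaco 65B release consists of LoRA adapter weights that are applied on top of the LLaMA 65B base model.

- Affordable Further Fine-Tuning: The same QLoRA approach lets you adapt the model to your own data without a multi-GPU cluster.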
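To see why LoRA adapters are small, count parameters. A LoRA adapter on a weight matrix of shape d_out × d_in adds two matrices, A (r × d_in) and B (d_out × r), so it trains r × (d_in + d_out) parameters instead of d_out × d_in. The sketch below applies this to every linear layer of a LLaMA-65B-sized transformer (hidden size 8192, MLP size 22016, 80 layers). The rank r = 64 is an illustrative assumption, not necessarily the setting of the released Guanaco adapters.

```python
def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in weight: A (rank x d_in) + B (d_out x rank)."""
    return rank * (d_in + d_out)


def llama_lora_params(hidden: int, mlp: int, layers: int, rank: int) -> int:
    """LoRA parameters when adapting every linear layer of a LLaMA-style block."""
    attention = 4 * lora_params(hidden, hidden, rank)  # q, k, v, o projections
    mlp_part = (
        lora_params(hidden, mlp, rank)    # gate projection
        + lora_params(hidden, mlp, rank)  # up projection
        + lora_params(mlp, hidden, rank)  # down projection
    )
    return layers * (attention + mlp_part)


if __name__ == "__main__":
    total = llama_lora_params(hidden=8192, mlp=22016, layers=80, rank=64)
    print(f"LoRA parameters: {total:,} ({total / 65e9:.2%} of 65B)")
```

Under these assumptions the adapters come to about 0.8 billion parameters, roughly 1.2% of the base model, which is why they can be shared and trained far more cheaply than the full 65B weights.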

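Here's a sample code snippet that loads the LLaMA 65B base model in 4-bit, QLoRA-style, and applies the Guanaco 65B adapter with the `peft` library:

```python
# Load the LLaMA 65B base model in 4-bit NF4 (as in QLoRA) and apply the Guanaco LoRA adapter.
# Requires: pip install transformers accelerate bitsandbytes peft
# The repository IDs are assumptions; confirm them on the Hugging Face Hub.
import torch
from peft import PeftModel
from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

base_id = "huggyllama/llama-65b"        # LLaMA 65B base weights
adapter_id = "timdettmers/guanaco-65b"  # Guanaco 65B LoRA adapter weights

bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.bfloat16,
)
tokenizer = AutoTokenizer.from_pretrained(base_id)
base = AutoModelForCausalLM.from_pretrained(base_id, quantization_config=bnb_config, device_map="auto")
model = PeftModel.from_pretrained(base, adapter_id)

prompt = "### Human: What is the capital of France? ### Assistant:"
inputs = tokenizer(prompt, return_tensors="pt").to(base.device)
output = model.generate(**inputs, max_new_tokens=64)
print(tokenizer.decode(output[0], skip_special_tokens=True))
```

Conclusion: Why Guanaco 65B is a Game-Changer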

As we wrap up this guide, it's clear that Guanaco 65B is not just another text-generation model. It's a versatile, customizable tool that comes in versions for a wide range of hardware setups, from full-precision weights to 4-bit quantized and CPU builds. And because it was built with QLoRA, it can be fine-tuned further at a fraction of the usual memory cost. Taken together, Guanaco 65B makes a strong case for open, fine-tuned models in text generation.

Whether you're a seasoned developer or a curious enthusiast, this model offers something for everyone. So why wait? Dive into the world of Guanaco 65B and unlock the future of text generation today!
