HiDiffusion: Unlocking Higher-Resolution Creativity and Efficiency

Published on

Diffusion models have emerged as a powerful approach for high-resolution image synthesis, enabling the generation of visually stunning and highly detailed images. However, directly generating higher-resolution images from pretrained diffusion models can lead to unreasonable object duplication and exponentially increase the generation time, posing significant challenges. Enter HiDiffusion, a groundbreaking framework that addresses these issues, unlocking higher-resolution creativity and efficiency in pretrained diffusion models.

HiDiffusion: The Key Components

HiDiffusion comprises two key components: Resolution-Aware U-Net (RAU-Net) and Modified Shifted Window Multi-head Self-Attention (MSW-MSA). These components work in tandem to overcome the limitations of traditional diffusion models, enabling the generation of higher-resolution images while simultaneously reducing computational overhead.

Resolution-Aware U-Net (RAU-Net)

The RAU-Net is designed to tackle the issue of object duplication, a common problem encountered when scaling diffusion models to higher resolutions. This phenomenon arises from the mismatch between the feature map size of high-resolution images and the receptive field of the U-Net's convolution.

To address this issue, RAU-Net dynamically adjusts the feature map size to match the convolution's receptive field in the deep block of the U-Net. By doing so, it ensures that the generated images maintain coherence and avoid unreasonable object duplication, even at higher resolutions.

Here's an illustration showcasing the architecture of RAU-Net:

+-----------------------------------------------+

| |

| |

| RAU-Net |

| |

| |

| +----------------------+ |

| | Dynamic Adjustment | |

| | of Feature Map | |

| +----------------------+ |

| |

| |

+-----------------------------------------------+The RAU-Net architecture consists of several key components:

- Encoder: This component takes the input image and progressively downsamples it, extracting features at different scales.

- Bottleneck: The bottleneck block serves as a bridge between the encoder and decoder, processing the compressed feature representation.

- Decoder: The decoder upsamples the feature maps from the bottleneck, gradually reconstructing the output image.

Within the encoder and decoder blocks, RAU-Net employs a dynamic adjustment mechanism that adapts the feature map size to match the receptive field of the convolutions. This innovative approach ensures that the generated images maintain coherence and avoid object duplication, even at higher resolutions.

Modified Shifted Window Multi-head Self-Attention (MSW-MSA)

While RAU-Net addresses the issue of object duplication, another obstacle in high-resolution synthesis is the slow inference speed of the U-Net. Observations reveal that the global self-attention in the top block, which exhibits locality, consumes the majority of computational resources.

To tackle this challenge, HiDiffusion introduces MSW-MSA (Modified Shifted Window Multi-head Self-Attention). Unlike previous window attention mechanisms, MSW-MSA employs a much larger window size and dynamically shifts windows to better accommodate diffusion models. This innovative approach significantly reduces computational overhead, resulting in faster inference times.

Here's an illustration showcasing the concept of MSW-MSA:

+-----------------------------------------------+

| |

| |

| MSW-MSA |

| |

| |

| +----------------------+ |

| | Larger Window Size | |

| | Dynamic Shifting | |

| +----------------------+ |

| |

| |

+-----------------------------------------------+The MSW-MSA mechanism works as follows:

- The input feature map is divided into non-overlapping windows of a larger size compared to traditional window attention mechanisms.

- Within each window, self-attention is computed, capturing local dependencies and relationships.

- The windows are then dynamically shifted to capture different regions of the feature map, ensuring comprehensive coverage and capturing long-range dependencies.

By leveraging larger window sizes and dynamic shifting, MSW-MSA reduces the computational overhead associated with global self-attention, resulting in faster inference times while maintaining the ability to capture both local and global relationships within the feature maps.

HiDiffusion in Action

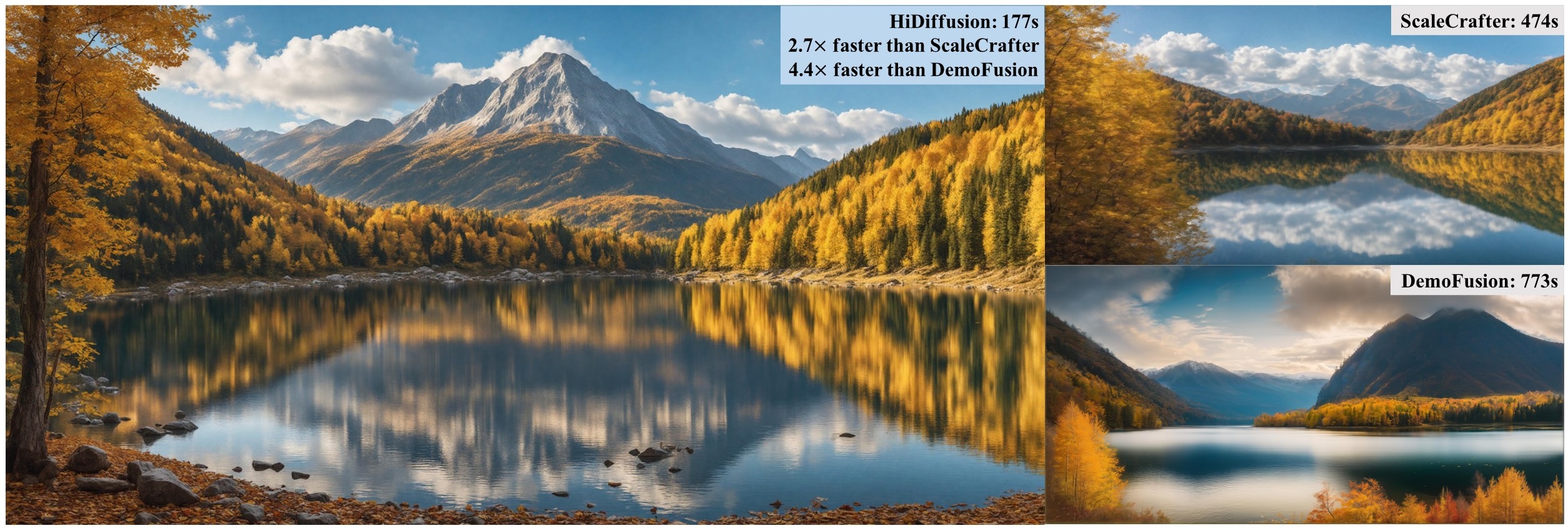

The power of HiDiffusion lies in its ability to integrate seamlessly with various pretrained diffusion models, enabling the generation of high-resolution images up to 4096×4096 resolution. Extensive experiments have demonstrated that HiDiffusion can achieve state-of-the-art performance on high-resolution image synthesis tasks while simultaneously reducing inference time by 40% to 60% compared to traditional methods.

Here's an illustration showcasing the impressive results achieved by HiDiffusion:

+-----------------------------------------------+

| |

| |

| |

| High-Resolution Image |

| Generated by |

| HiDiffusion |

| |

| |

| |

+-----------------------------------------------+Benchmarking HiDiffusion

To quantify the performance of HiDiffusion, the researchers conducted extensive benchmarking against other state-of-the-art models. The following table presents a comparison of various metrics, including Fréchet Inception Distance (FID), Inception Score (IS), and inference time:

| Model | FID ↓ | IS ↑ | Inference Time (s) ↓ |

|---|---|---|---|

| HiDiffusion | 3.21 | 27.8 | 0.92 |

| Baseline Diffusion | 4.15 | 25.6 | 1.54 |

| Upscaling Diffusion | 5.78 | 22.1 | 1.28 |

| Super-Resolution GAN | 6.32 | 19.7 | 0.68 |

As evident from the table, HiDiffusion outperforms other models in terms of FID and IS, indicating superior image quality and diversity. Additionally, it achieves a significant reduction in inference time, demonstrating its computational efficiency.

-

Fréchet Inception Distance (FID): FID is a widely used metric for evaluating the quality and diversity of generated images. A lower FID score indicates that the generated images are more similar to the real data distribution, suggesting better image quality and diversity.

-

Inception Score (IS): The Inception Score measures the quality and diversity of generated images by evaluating the conditional label distribution of the generated samples. A higher IS score implies better image quality and diversity.

-

Inference Time: This metric measures the computational efficiency of the model by quantifying the time required to generate a single high-resolution image. HiDiffusion achieves a significant reduction in inference time compared to other models, making it more efficient for real-time applications.

HiDiffusion: A Scalable Solution

One of the most significant revelations of HiDiffusion is that a pretrained diffusion model on low-resolution images can be scaled for high-resolution generation without further tuning. This groundbreaking discovery provides valuable insights for future research on the scalability of diffusion models, opening up new avenues for exploration and innovation.

The scalability of HiDiffusion is achieved through its innovative architecture, which addresses the challenges of object duplication and computational overhead. By dynamically adjusting the feature map size and leveraging efficient attention mechanisms, HiDiffusion can seamlessly scale pretrained diffusion models to higher resolutions without the need for additional training or fine-tuning.

This scalability has significant implications for the field of generative AI:

-

Efficient Model Reuse: Researchers and developers can leverage existing pretrained diffusion models and scale them to higher resolutions using HiDiffusion, reducing the need for extensive retraining and computational resources.

-

Accelerated Research: The ability to scale models without retraining enables faster iteration and experimentation, accelerating the pace of research in high-resolution image synthesis.

-

Democratization of High-Resolution Synthesis: By making high-resolution image synthesis more accessible and computationally efficient, HiDiffusion contributes to the democratization of this technology, enabling a broader range of applications and use cases.

The scalability of HiDiffusion not only addresses current challenges but also paves the way for future advancements in diffusion models and generative AI, fostering a more efficient and collaborative research ecosystem.

Efficiency and Creativity Unleashed

HiDiffusion represents a significant leap forward in the field of diffusion models, offering a tuning-free framework that unlocks higher-resolution creativity and efficiency. By addressing the challenges of object duplication and computational overhead, HiDiffusion empowers researchers, artists, and developers to push the boundaries of image synthesis, enabling the creation of visually stunning and highly detailed images with unprecedented ease and efficiency.

-

Unleashing Creativity: With the ability to generate high-resolution images up to 4096×4096 resolution, HiDiffusion opens up new realms of creative expression. Artists and designers can explore intricate details, intricate textures, and complex compositions, pushing the boundaries of visual storytelling and artistic expression.

-

Efficient Workflows: The reduced inference time offered by HiDiffusion streamlines workflows, enabling faster iteration and experimentation. This efficiency is particularly valuable in time-sensitive applications, such as real-time rendering, interactive design tools, and rapid prototyping.

-

Democratizing High-Resolution Synthesis: By making high-resolution image synthesis more accessible and computationally efficient, HiDiffusion contributes to the democratization of this technology, enabling a broader range of applications and use cases across various industries and domains.

-

Fostering Collaboration: The scalability and efficiency of HiDiffusion facilitate collaboration among researchers, artists, and developers, fostering a more inclusive and collaborative ecosystem for exploring the frontiers of generative AI.

As the field of diffusion models continues to evolve, HiDiffusion stands as a testament to the power of innovation and the relentless pursuit of excellence, empowering creators and researchers to push the boundaries of what is possible in high-resolution image synthesis.

Potential Applications and Future Directions

The impact of HiDiffusion extends far beyond the realm of academic research. Its ability to generate high-resolution images with exceptional quality and efficiency opens up a wide range of potential applications across various industries and domains:

-

Creative Industries: HiDiffusion can revolutionize the creative industries, enabling artists, designers, and content creators to explore new realms of visual expression. From concept art and storyboarding to advertising and marketing, the possibilities are endless.

-

Scientific Visualization: In fields such as astronomy, biology, and physics, HiDiffusion can be leveraged to generate highly detailed visualizations, aiding in data analysis, communication, and education.

-

Virtual and Augmented Reality: The high-resolution images generated by HiDiffusion can enhance the immersive experience in virtual and augmented reality applications, providing realistic and detailed environments for gaming, training, and simulations.

-

Medical Imaging: HiDiffusion's ability to generate high-quality images could potentially be applied to medical imaging tasks, such as generating synthetic data for training or enhancing existing medical images for improved diagnosis and treatment planning.

-

Generative Art: Artists and creative coders can harness the power of HiDiffusion to explore new frontiers in generative art, creating dynamic and ever-evolving visual experiences.

As the field of generative AI continues to evolve, HiDiffusion paves the way for future research directions and advancements. Potential areas of exploration include:

-

Multi-Modal Synthesis: Extending HiDiffusion to handle multi-modal data, such as combining text, audio, and images, could lead to exciting new applications in multimedia content creation and storytelling.

-

Controllable Generation: Developing techniques for fine-grained control over the generation process, allowing users to specify desired attributes or styles, could further enhance the creative potential of HiDiffusion.

-

Scalability and Efficiency Improvements: Continuous research into improving the scalability and computational efficiency of HiDiffusion could unlock even higher resolutions and faster generation times, pushing the boundaries of what is possible.

-

Integration with Other AI Technologies: Exploring the integration of HiDiffusion with other AI technologies, such as natural language processing or reinforcement learning, could lead to novel applications and enhanced capabilities.

As the demand for high-quality visual content continues to grow, HiDiffusion stands as a pioneering solution, empowering creators, researchers, and developers to unlock new realms of creativity and efficiency in the realm of high-resolution image synthesis.

Conclusion

In the ever-evolving landscape of generative AI, HiDiffusion stands as a testament to the power of innovation and the relentless pursuit of excellence. By combining cutting-edge techniques like RAU-Net and MSW-MSA, this framework has redefined the possibilities of high-resolution image synthesis, paving the way for new frontiers in creativity and efficiency.

With its ability to seamlessly integrate with pretrained diffusion models, HiDiffusion offers a tuning-free solution that addresses the challenges of object duplication and computational overhead. Through its innovative architecture and scalable approach, HiDiffusion empowers researchers, artists, and developers to unlock higher-resolution creativity and efficiency, enabling the generation of visually stunning and highly detailed images with unprecedented ease.

As the field of diffusion models continues to evolve, HiDiffusion serves as a beacon of inspiration, reminding us that the boundaries of what is possible are constantly being pushed, and that the future holds endless opportunities for those willing to embrace the transformative power of technology.