Understanding Few-Shot Prompting in Prompt Engineering

Introduction to Few-Shot Prompting

Welcome to the fascinating world of Few-Shot Prompting in Prompt Engineering! If you've been scratching your head, wondering how to make your language models perform specific tasks with minimal examples, you're in the right place. This article aims to be your go-to resource for understanding the ins and outs of few-shot prompting.

In the next sections, we'll delve into the mechanics, types, and practical examples of few-shot prompting. By the end of this read, you'll not only grasp the concept but also know how to implement it effectively. So, let's get started!

How Does Few-Shot Prompting Work?

What is Few-Shot Prompting?

Few-shot prompting is a technique where you provide a machine learning model, particularly a language model, with a small set of examples to guide its behavior for a specific task. Unlike traditional machine learning methods that require extensive training data, few-shot prompting places just a handful of examples directly in the prompt, and the model infers the task from them without any retraining. This is useful when you have limited data or need quick results.

Key Components:

- Prompt Template: This is the structure that holds your examples. It's usually a string where variables can be inserted.

- Examples: These are the actual data points that guide the model. Each example is a dictionary with keys as input variables and values as the corresponding data.
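To make the two components concrete, here is a minimal sketch in plain Python. The template string, the `template_variables` and `render` helpers, and the sample data are illustrative assumptions, not part of any particular library. The sketch only checks that each example dictionary supplies exactly the variables the template expects before filling it in.

```python
from string import Formatter

# Hypothetical template: a plain string with named placeholders.
TEMPLATE = "Question: {question}\nAnswer: {answer}"

# Hypothetical examples: one dictionary per example, keyed by variable name.
EXAMPLES = [
    {"question": "What is the capital of France?", "answer": "Paris"},
    {"question": "What is 2 + 2?", "answer": "4"},
]


def template_variables(template: str) -> set[str]:
    """Return the placeholder names that appear in a format string."""
    return {name for _, name, _, _ in Formatter().parse(template) if name}


def render(template: str, example: dict[str, str]) -> str:
    """Fill the template with one example, failing loudly on a key mismatch."""
    expected = template_variables(template)
    if set(example) != expected:
        raise ValueError(
            f"example keys {sorted(example)} != template variables {sorted(expected)}"
        )
    return template.format(**example)


rendered = [render(TEMPLATE, example) for example in EXAMPLES]
print("\n\n".join(rendered))
```

Checking the keys up front is a design choice: a missing or misspelled key surfaces as a clear error instead of a malformed prompt.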

Examples of Few-Shot Prompting

Now that we've covered the basic mechanics of few-shot prompting, let's dive into some practical examples. These will give you a clearer picture of how this technique can be applied in various scenarios.

Muhammad Ali vs Alan Turing: Who Lived Longer?

In this example, we'll use few-shot prompting with intermediate steps to determine who lived longer: Muhammad Ali or Alan Turing.

Steps to Follow:

- Define the Task: The end goal is to find out who lived longer between Muhammad Ali and Alan Turing.

- Identify Intermediate Steps: The intermediate steps involve finding the ages of Muhammad Ali and Alan Turing at the time of their death.

      intermediate_steps = [
          "Find the age of Muhammad Ali when he died.",
          "Find the age of Alan Turing when he died.",
      ]

- Run the Model: Feed these intermediate steps as few-shot examples to the model.

      examples = [
          {"question": "How old was Muhammad Ali when he died?", "answer": "74"},
          {"question": "How old was Alan Turing when he died?", "answer": "41"},
      ]

- Analyze the Results: Based on the answers, we can conclude that Muhammad Ali lived longer than Alan Turing.
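The final "analyze" step can be sketched as a small comparison over the intermediate answers. The `longer_lived` helper and the `name` field are assumptions added for illustration; in practice the ages would come from the model's answers rather than being hard-coded.

```python
# Intermediate answers, as in the examples list above; the "name" field is
# added here (an assumption) so the comparison can report whose answer won.
answers = [
    {"name": "Muhammad Ali", "question": "How old was Muhammad Ali when he died?", "answer": "74"},
    {"name": "Alan Turing", "question": "How old was Alan Turing when he died?", "answer": "41"},
]


def longer_lived(items: list[dict[str, str]]) -> str:
    """Return the name whose intermediate answer (age at death) is largest."""
    # Model answers arrive as text, so convert to int before comparing.
    return max(items, key=lambda item: int(item["answer"]))["name"]


print(longer_lived(answers))  # Muhammad Ali
```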

Maternal Grandfather of George Washington

Let's say you're curious about the maternal grandfather of George Washington. This is a perfect task for few-shot prompting with intermediate steps.

Steps to Follow:

- Define the Task: The end goal is to identify the maternal grandfather of George Washington.

- Identify Intermediate Steps: First, find out who George Washington's mother was, and then identify her father.

      intermediate_steps = [
          "Identify the mother of George Washington.",
          "Find out who her father was.",
      ]

- Run the Model: Provide these steps as few-shot examples.

      examples = [
          {"question": "Who was George Washington's mother?", "answer": "Mary Ball Washington"},
          {"question": "Who was the father of Mary Ball Washington?", "answer": "Joseph Ball"},
      ]

- Analyze the Results: Based on the model's answers, we can conclude that the maternal grandfather of George Washington was Joseph Ball.
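Unlike the age comparison, this task is sequential: the second question can only be asked once the first has been answered. A rough sketch of that chaining follows. `fake_model` is a hypothetical stand-in that looks answers up in a dictionary, so the example runs without a real language model.

```python
# Hypothetical stand-in for a language model call: a fixed lookup table
# holding the two answers from the walkthrough above.
KNOWN_ANSWERS = {
    "Who was George Washington's mother?": "Mary Ball Washington",
    "Who was the father of Mary Ball Washington?": "Joseph Ball",
}


def fake_model(question: str) -> str:
    """Answer a question from the lookup table (placeholder for a real model)."""
    return KNOWN_ANSWERS[question]


def maternal_grandfather(person: str) -> str:
    """Resolve the two-hop question by feeding the first answer into the second."""
    mother = fake_model(f"Who was {person}'s mother?")
    return fake_model(f"Who was the father of {mother}?")


print(maternal_grandfather("George Washington"))  # Joseph Ball
```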

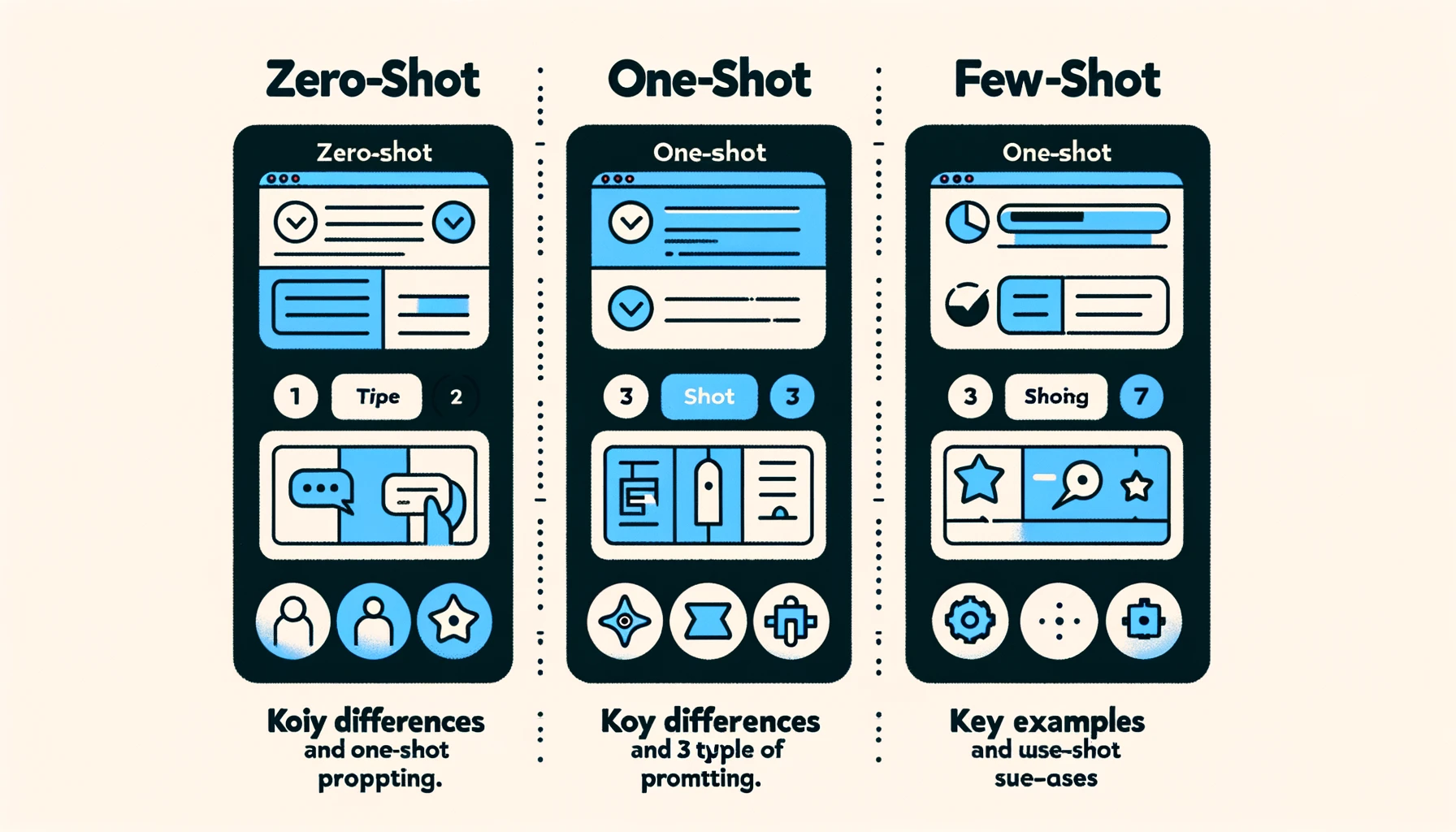

Zero-Shot vs Few-Shot Prompting

Zero-shot prompting is when you ask the model to perform a task without providing any examples. The model relies solely on the instructions given in the prompt. For instance, if you ask the model to classify a text into positive, neutral, or negative sentiment, it will do so based solely on the question.

Few-shot prompting, on the other hand, involves providing a set of examples to guide the model's behavior. These examples act as a roadmap, helping the model better understand the task at hand.
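The difference is easiest to see in the prompt text itself. The sketch below builds a sentiment-classification prompt with a configurable number of examples. The `build_prompt` helper, the instruction wording, and the sample texts are all invented for illustration.

```python
INSTRUCTION = "Classify the text as positive, neutral, or negative."

# Invented labeled examples, used only for the few-shot variant.
SHOTS = [
    ("The battery lasts all day.", "positive"),
    ("The package arrived on Tuesday.", "neutral"),
    ("The screen cracked within a week.", "negative"),
]


def build_prompt(text: str, shots: list[tuple[str, str]]) -> str:
    """Build a prompt with zero or more worked examples before the real input."""
    parts = [INSTRUCTION]
    for example_text, label in shots:
        parts.append(f"Text: {example_text}\nSentiment: {label}")
    # The final block leaves the label empty for the model to complete.
    parts.append(f"Text: {text}\nSentiment:")
    return "\n\n".join(parts)


zero_shot = build_prompt("The manual is confusing.", shots=[])
few_shot = build_prompt("The manual is confusing.", shots=SHOTS)
print(zero_shot)
print("-----")
print(few_shot)
```

Passing a single pair in `shots` would give a one-shot prompt, so the same helper covers all three cases.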

**When to Use Zero-Shot and When to Opt for Few-Shot?**

- Zero-Shot Prompting: Ideal for straightforward tasks that don't require contextual understanding. For example, simple queries like "What is the capital of France?" can be answered effectively using zero-shot prompting.

- Few-Shot Prompting: Best suited for complex tasks that require a deeper level of understanding or involve multiple steps. For instance, if you're trying to find out who lived longer between two historical figures, few-shot prompting with intermediate steps would be the way to go.

How to Set Up Few-Shot Prompts

- Create a List of Examples: The first step is to create a list of examples that the model will use for guidance. Each example should be a dictionary. For instance:

      examples = [
          {"question": "What is the capital of France?", "answer": "Paris"},
          {"question": "What is 2 + 2?", "answer": "4"},
      ]

- Format the Examples: The next step is to format these examples into a string that the model can understand. This is where the PromptTemplate object comes in. Here's how you can do it:

      # some_library is a placeholder for whichever prompting library you use
      from some_library import PromptTemplate

      example_prompt = PromptTemplate(
          input_variables=["question", "answer"],
          template="Question: {question}\nAnswer: {answer}",
      )

- Feed Examples to the Model: Finally, you feed these formatted examples to the model along with the task you want it to perform. This is usually done by placing the formatted examples ahead of the actual prompt.

      prompt = "Question: What is the capital of Germany?\nAnswer:"
      # Format one example per call (assumes format() takes the variables as keyword arguments)
      formatted_examples = "\n\n".join(example_prompt.format(**example) for example in examples)
      final_prompt = formatted_examples + "\n\n" + prompt

By following these steps, you've essentially set up a few-shot prompt. The model will now use the examples to better understand and answer the question in the final prompt.
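For readers who want to run the three steps without installing anything, here is a dependency-free sketch. `SimplePromptTemplate` and `build_few_shot_prompt` are hypothetical names written for this illustration. The class mimics the `input_variables`, `template`, and `format` shape used in the steps above, but it is not any library's actual class.

```python
from dataclasses import dataclass


@dataclass
class SimplePromptTemplate:
    """Hypothetical minimal template: a format string plus its variable names."""

    input_variables: list[str]
    template: str

    def format(self, **values: str) -> str:
        """Fill the template, requiring every declared variable to be present."""
        missing = [name for name in self.input_variables if name not in values]
        if missing:
            raise KeyError(f"missing variables: {missing}")
        return self.template.format(**values)


def build_few_shot_prompt(template, examples, question: str) -> str:
    """Render each example, then append the unanswered question."""
    rendered = [template.format(**example) for example in examples]
    rendered.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(rendered)


examples = [
    {"question": "What is the capital of France?", "answer": "Paris"},
    {"question": "What is 2 + 2?", "answer": "4"},
]
example_prompt = SimplePromptTemplate(
    input_variables=["question", "answer"],
    template="Question: {question}\nAnswer: {answer}",
)
final_prompt = build_few_shot_prompt(
    example_prompt, examples, "What is the capital of Germany?"
)
print(final_prompt)
```

Ending the prompt with an open `Answer:` line keeps the new question in the same layout as the examples, which makes it clear to the model what it is expected to fill in.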

Few-Shot Prompting: Self-Ask with Search

One of the most intriguing types of few-shot prompting is the "Self-Ask with Search" method. In this approach, the few-shot examples show the model how to break a query into follow-up questions that it asks itself, with each follow-up answered by a search step before the final answer is given. This is particularly useful when you're dealing with complex queries that require the model to sift through a lot of information.

How it Works:

- Configure the Model: First, you need to set up your model to be able to ask itself questions. This usually involves some initial setup where you define the types of questions the model can ask.

      # Illustrative configuration; the key name is not tied to a specific library
      self_ask_config = {
          "types_of_questions": ["definition", "example", "explanation"],
      }

- Provide Few-Shot Examples: Next, you provide the model with a set of few-shot examples that guide its questioning process.

      examples = [
          {
              "self_ask_type": "definition",
              "question": "What is photosynthesis?",
              "answer": "The process by which green plants convert sunlight into food.",
          },
          {
              "self_ask_type": "example",
              "question": "Give an example of a renewable resource.",
              "answer": "Solar energy",
          },
      ]

- Run the Model: Finally, you run the model with these configurations and examples. The model will now ask itself questions based on the examples and types defined, helping it to better understand the task at hand.
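The loop behind this method can be sketched without a real model or search engine. In the sketch below, `scripted_model` and `fake_search` are hypothetical stubs, and the `Follow up:`, `Intermediate answer:`, and `So the final answer is:` markers are one commonly used layout for self-ask prompts, assumed here rather than required by the technique.

```python
FOLLOW_UP = "Follow up:"
INTERMEDIATE = "Intermediate answer:"
FINAL = "So the final answer is:"

# One worked few-shot example showing the layout the model should imitate.
WORKED_EXAMPLE = (
    "Question: Who was the maternal grandfather of George Washington?\n"
    "Follow up: Who was George Washington's mother?\n"
    "Intermediate answer: Mary Ball Washington\n"
    "Follow up: Who was the father of Mary Ball Washington?\n"
    "Intermediate answer: Joseph Ball\n"
    "So the final answer is: Joseph Ball\n\n"
)

# Hypothetical search backend: a lookup table standing in for a search engine.
SEARCH_RESULTS = {
    "What is photosynthesis?": "The process by which green plants convert sunlight into food.",
}


def fake_search(query: str) -> str:
    """Return a canned result for a follow-up question."""
    return SEARCH_RESULTS.get(query, "No result found.")


def scripted_model(prompt: str) -> str:
    """Hypothetical model stub: asks one follow-up, then gives a final answer."""
    current = prompt.rsplit("Question:", 1)[-1]  # ignore the worked example
    if INTERMEDIATE not in current:
        return f"{FOLLOW_UP} What is photosynthesis?"
    return f"{FINAL} Plants need sunlight because photosynthesis turns it into food."


def self_ask(question: str, max_steps: int = 5) -> str:
    """Alternate model turns and search lookups until a final answer appears."""
    prompt = WORKED_EXAMPLE + f"Question: {question}\n"
    for _ in range(max_steps):
        reply = scripted_model(prompt).strip()
        prompt += reply + "\n"
        if reply.startswith(FINAL):
            return reply[len(FINAL):].strip()
        if reply.startswith(FOLLOW_UP):
            # The model asked itself a question; answer it via search.
            query = reply[len(FOLLOW_UP):].strip()
            prompt += f"{INTERMEDIATE} {fake_search(query)}\n"
    raise RuntimeError("no final answer within max_steps")


print(self_ask("Why do plants need sunlight?"))
```

With a real model, `scripted_model` would be replaced by an API call, and `max_steps` guards against a model that never produces a final answer.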

Few-Shot Prompting with Intermediate Steps

Another powerful type of few-shot prompting involves using intermediate steps to arrive at a final answer. This is especially useful for tasks that require logical reasoning or multiple steps to solve.

How it Works:

- Define the Task: Clearly define what the end goal or final answer should be. For example, if you're trying to find out who lived longer between two individuals, your end goal is to identify that person.

- Identify Intermediate Steps: List down the steps or questions that need to be answered to reach the final conclusion. For instance, you might need to first find out the ages of the two individuals at the time of their death.

      intermediate_steps = [
          "Find the age of Muhammad Ali when he died.",
          "Find the age of Alan Turing when he died.",
      ]

- Run the Model: Feed these intermediate steps as few-shot examples to the model. The model will then use these steps to logically arrive at the final answer.
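One way to feed intermediate steps to the model is to write them into a worked example, so the prompt itself demonstrates the reasoning pattern. The sketch below assumes a simple `Step N:` / `Final answer:` layout; the layout and the `render_example` and `build_stepwise_prompt` helpers are illustrative choices, not a fixed convention.

```python
# One worked example whose answer spells out the intermediate steps.
WORKED_EXAMPLE = {
    "question": "Who lived longer, Muhammad Ali or Alan Turing?",
    "steps": [
        ("How old was Muhammad Ali when he died?", "74"),
        ("How old was Alan Turing when he died?", "41"),
    ],
    "final": "Muhammad Ali",
}


def render_example(example: dict) -> str:
    """Write the question, each intermediate step, and then the final answer."""
    lines = [f"Question: {example['question']}"]
    for number, (step_question, step_answer) in enumerate(example["steps"], start=1):
        lines.append(f"Step {number}: {step_question} {step_answer}")
    lines.append(f"Final answer: {example['final']}")
    return "\n".join(lines)


def build_stepwise_prompt(new_question: str) -> str:
    """Show the worked example, then pose the new question with 'Step 1:' left open."""
    return f"{render_example(WORKED_EXAMPLE)}\n\nQuestion: {new_question}\nStep 1:"


print(build_stepwise_prompt("Who was the maternal grandfather of George Washington?"))
```

Leaving `Step 1:` open nudges the model to produce its own intermediate steps before committing to a final answer.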

By understanding and implementing these types of few-shot prompting, you can tackle a wide range of complex tasks with your language model. Whether it's answering intricate questions or solving multi-step problems, few-shot prompting offers a robust and flexible solution.

Frequently Asked Questions (FAQ)

What is zero-shot prompting vs few-shot prompting?

Zero-shot prompting involves asking the model to perform tasks without any prior examples. Few-shot prompting, on the other hand, provides the model with a set of examples to guide its behavior.

What is an example of few-shot learning?

An example of few-shot learning would be teaching a model to identify different breeds of dogs by providing it with just a couple of examples for each breed.

What is one-shot or few-shot learning in prompt engineering?

One-shot learning involves providing a single example to guide the model's behavior, while few-shot learning involves multiple examples. Both techniques fall under the umbrella of prompt engineering, which is the practice of crafting effective prompts for machine learning models.

What are three types of prompting?

The three main types of prompting are zero-shot prompting, one-shot prompting, and few-shot prompting. Each has its own set of advantages and disadvantages, and the choice between them depends on the specific task at hand.

Conclusion

Few-shot prompting is a powerful and versatile technique in prompt engineering. Whether you're dealing with simple queries or complex multi-step tasks, few-shot prompting offers a robust solution. By understanding its mechanics, types, and practical applications, you can unlock a whole new level of capability with your language models.

Stay tuned for more insightful articles on prompt engineering and machine learning. Happy prompting!