Mistral AI Unveils Groundbreaking 8x22B MoE Model: A New Era in Open-Source AI

Introduction to Mixtral 8x22B

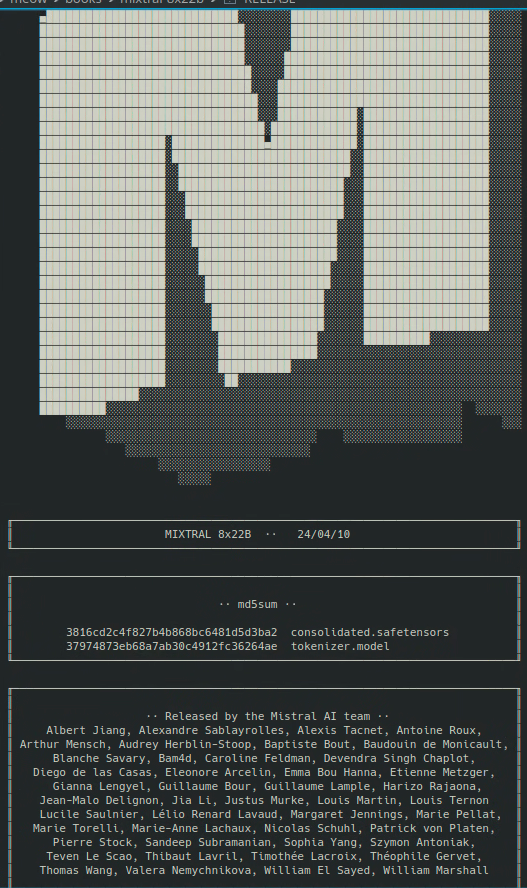

In a development that has caught the attention of the AI community, Mistral AI has released its highly anticipated 8x22B MoE (mixture-of-experts) model. The release marks a significant milestone for open-source artificial intelligence: it promises performance and capabilities that were previously thought to be the exclusive domain of proprietary models.

The Rise of Open-Source AI

The field of artificial intelligence has been undergoing a rapid transformation in recent years, with the emergence of powerful open-source models that have challenged the dominance of proprietary systems. This shift has been driven by a growing recognition of the importance of collaboration and transparency in the development of AI technologies, as well as a desire to democratize access to these powerful tools.

Mistral AI has been at the forefront of this movement, with a track record of delivering cutting-edge models that have consistently pushed the boundaries of what is possible with open-source AI. Its previous releases, such as the highly acclaimed Mixtral 8x7B model, have garnered widespread praise from researchers and developers alike, and have set the stage for even greater breakthroughs in the future.

The 8x22B MoE Model: A Quantum Leap Forward

The 8x22B MoE model represents a major step forward in the capabilities of open-source AI. It has roughly 141 billion parameters in total, of which about 39 billion are active for any given token. The name is slightly misleading on this point: the eight experts share their attention layers, so the total is well below 8 x 22 = 176 billion. To put this in perspective, the 8x22B MoE model is about three times the total size of its predecessor, Mixtral 8x7B, and is positioned to compete with some of the most advanced proprietary models.
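
The gap between total and active parameters comes from expert routing: for each token, a small gating network picks a few experts and the rest are skipped. The following is a minimal sketch of that idea, not Mistral AI's implementation. It assumes top-2 routing with a softmax over the selected gate scores, and the experts, gate scores, and function names (`top_k_route`, `moe_forward`) are toy stand-ins made up for illustration.

```python
import math

def top_k_route(gate_logits, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    top = sorted(range(len(gate_logits)),
                 key=lambda i: gate_logits[i], reverse=True)[:k]
    # Softmax over the selected logits only, so the k weights sum to 1.
    peak = max(gate_logits[i] for i in top)
    exps = [math.exp(gate_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, experts, gate_logits, k=2):
    """Mix the outputs of the k selected experts; the others are never called."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))

# Eight toy "experts": expert i simply multiplies its input by i + 1.
experts = [lambda x, scale=i + 1: scale * x for i in range(8)]
# Hypothetical gate scores for one token (a real gate computes these from x).
gate_logits = [0.1, 2.0, -1.0, 0.3, 2.0, 0.0, -0.5, 1.0]

routes = top_k_route(gate_logits)
output = moe_forward(3.0, experts, gate_logits)
print(routes)  # [(1, 0.5), (4, 0.5)]
print(output)  # 10.5
```

Only experts 1 and 4 run for this token, which is why the compute per token tracks the active parameter count while the remaining experts sit idle.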

Download Mixtral 8x22B with this magnet link:

magnet:?xt=urn:btih:9238b09245d0d8cd915be09927769d5f7584c1c9&dn=mixtral-8x22b&tr=udp%3A%2F%2Fopen.demonii.com%3A1337%2Fannounce&tr=http%3A%2F%2Ftracker.opentrackr.org%3A1337%2Fannounce
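
If you want to check what a magnet link contains before handing it to a torrent client, it can be unpacked with Python's standard library. This is a small sketch, assuming the tracker parameters are percent-encoded in the usual way (`%2F%2F` for `//`); `parse_magnet` is a helper name made up for this example.

```python
from urllib.parse import urlsplit, parse_qs

MAGNET = (
    "magnet:?xt=urn:btih:9238b09245d0d8cd915be09927769d5f7584c1c9"
    "&dn=mixtral-8x22b"
    "&tr=udp%3A%2F%2Fopen.demonii.com%3A1337%2Fannounce"
    "&tr=http%3A%2F%2Ftracker.opentrackr.org%3A1337%2Fannounce"
)

def parse_magnet(uri):
    """Split a BitTorrent magnet URI into info hash, display name, and trackers."""
    parts = urlsplit(uri)
    if parts.scheme != "magnet":
        raise ValueError("not a magnet URI")
    params = parse_qs(parts.query)  # also decodes percent-encoding
    xt = params.get("xt", [""])[0]
    prefix = "urn:btih:"
    if not xt.startswith(prefix):
        raise ValueError("missing BitTorrent info hash (xt=urn:btih:...)")
    return {
        "info_hash": xt[len(prefix):],
        "name": params.get("dn", [None])[0],
        "trackers": params.get("tr", []),
    }

info = parse_magnet(MAGNET)
print(info["info_hash"])
print(info["name"])
for tracker in info["trackers"]:
    print(tracker)
```

The info hash identifies the torrent's contents, so comparing it against the value in the original announcement is a quick way to confirm a copied link was not altered.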

Key Features and Capabilities

So what exactly sets the 8x22B MoE model apart from its predecessors? Here are a few of the key features and capabilities that make this release so exciting:

- Unparalleled Language Understanding: The 8x22B MoE model has been trained on an enormous corpus of text data, giving it a deep understanding of natural language that few open-source models can match. This means that the model can engage in more nuanced and contextually aware conversations, and can handle a wider range of tasks and applications than its predecessors.

- Enhanced Reasoning and Problem-Solving: In addition to its language understanding capabilities, the 8x22B MoE model has been designed to excel at reasoning and problem-solving tasks. It can tackle challenges that require logical thinking and inference, such as answering questions based on a given set of facts or generating creative solutions to open-ended problems.

- Improved Efficiency and Scalability: Despite its massive size, the 8x22B MoE model has been optimized for efficiency and scalability. Because only a subset of its experts runs for each token, it needs far less compute per token than a dense model of the same total size, which makes it more accessible to researchers and developers around the world.
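
One caveat on the efficiency point is worth making concrete. In a typical deployment every expert has to be held in memory even though only a few run per token, so the memory needed for the weights scales with the total parameter count, not the active count. The sketch below is a back-of-the-envelope estimate only: `weight_memory_gb` is a hypothetical helper, the 100-billion-parameter figure is a round placeholder and not a claim about this model, and the estimate ignores activations and the attention cache.

```python
def weight_memory_gb(total_params_billion, bits_per_param):
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return total_params_billion * bits_per_param / 8

# Placeholder model size; substitute the total parameter count you care about.
for bits in (16, 8, 4):
    print(f"{bits}-bit weights: {weight_memory_gb(100, bits):.0f} GB")
```

Halving the bits per parameter halves the footprint, which is why quantized builds are usually the first thing people reach for when a large model does not fit on their hardware.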

Implications for the Future of AI

The release of the 8x22B MoE model is not just a technical achievement, but also a powerful statement about the future of artificial intelligence. By showing that open-source models can approach, and in some cases match, the performance of proprietary systems, Mistral AI is helping to level the playing field and ensure that the benefits of AI are accessible to everyone.

This has profound implications for a wide range of fields and industries, from healthcare and education to finance and transportation. With the 8x22B MoE model and other open-source tools at their disposal, researchers and developers will be able to tackle some of the most pressing challenges facing our world today, and to create new applications and services that were previously out of reach.

Conclusion

The launch of the 8x22B MoE model by Mistral AI is a watershed moment in the history of artificial intelligence. By pushing the boundaries of what is possible with open-source AI, this release is set to accelerate the pace of innovation and discovery in the field, and to unlock new opportunities for researchers, developers, and businesses around the world.

As we look to the future, it is clear that open-source AI will play an increasingly important role in shaping the course of technological progress. With tools like the 8x22B MoE model at our disposal, we have the power to create a more intelligent, more connected, and more equitable world, one in which the benefits of AI are shared by all.

So let us celebrate this momentous achievement, and let us redouble our efforts to build a future in which artificial intelligence serves the greater good. Together, we can harness the power of open-source AI to solve the greatest challenges of our time, and to create a brighter, more hopeful tomorrow for all of humanity.
