Ambient Diffusion: The Pioneering AI Framework for Learning from Corrupted Data


Ambient Diffusion is a framework for training and fine-tuning diffusion models when only corrupted data is available. Developed by a team of researchers from UT Austin and UC Berkeley, it takes corrupted images as its only training input and never needs the clean originals, which also reduces how much of the training set the model memorizes.

In this article, we'll dissect the technical architecture of Ambient Diffusion, delve into its algorithms, and explore its codebase. We'll also look at its performance metrics and how it compares to other diffusion models in terms of efficiency and accuracy.

What is Ambient Diffusion?

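Ambient Diffusion is an AI framework specifically designed to train diffusion models on corrupted data. During training, it applies additional corruption (for example, erasing extra pixels) to images that are already corrupted, and trains the model to predict the full image from this further-corrupted version. The loss is computed only on the pixels that were observed in the original corrupted image, so no clean data is ever required.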
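A minimal NumPy sketch of the further-corruption step, assuming random inpainting (each pixel is either observed or erased). The helper `further_corrupt` and its `delta` parameter are hypothetical names for illustration, not the repository's API. Given the mask A of an already-corrupted image, it drops each still-visible pixel with probability `delta`, so the new mask Ã keeps only pixels that A also kept.

```python
import numpy as np


def further_corrupt(mask_a: np.ndarray, delta: float, rng: np.random.Generator) -> np.ndarray:
    """Return a further-corrupted mask A_tilde = A * B (hypothetical helper).

    mask_a: binary array, 1 where the pixel was observed in the corrupted data.
    delta:  probability of dropping each pixel that is still observed.
    """
    # B keeps each pixel independently with probability 1 - delta.
    mask_b = (rng.random(mask_a.shape) >= delta).astype(mask_a.dtype)
    # A pixel survives only if both A and B keep it,
    # so A_tilde never reveals a pixel that A had hidden.
    return mask_a * mask_b


rng = np.random.default_rng(0)
mask_a = (rng.random((8, 8)) >= 0.5).astype(np.float32)  # roughly half the pixels observed
mask_a_tilde = further_corrupt(mask_a, delta=0.2, rng=rng)
print(int(mask_a.sum()), int(mask_a_tilde.sum()))  # A_tilde keeps no more pixels than A
```

Because Ã is a subset of A, the further-corrupted image can always be computed from the corrupted training data alone.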

Stable Diffusion and other text-to-image models sometimes blatantly copy from their training images. We introduce Ambient Diffusion, a framework to train/finetune diffusion models given only corrupted images as input. This reduces the memorization of the training set.

— Giannis Daras (@giannis_daras) May 31, 2023

How to Set Up Ambient Diffusion

Setting up Ambient Diffusion involves a series of steps that require attention to detail. Here's a technical walkthrough:

- Clone the Repository:

  git clone https://github.com/giannisdaras/ambient-diffusion.git
  cd ambient-diffusion

- Anaconda Environment: create the environment, then activate it so that later installs go into it:

  conda env create -f environment.yml -n ambient
  conda activate ambient

- Install Dependencies:

  pip install git+https://github.com/huggingface/diffusers.git

- Download Pretrained Models: Make sure you allocate around 16GB of disk space for the pretrained models.

- Dataset Configuration: The framework supports custom dataset configurations, allowing you to specify the type of corruption you want to introduce during training.
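As an illustration of a corruption setting, the sketch below applies random inpainting to a whole dataset with NumPy. The function `make_corrupted_dataset` and its `drop_prob` parameter are hypothetical and do not reflect the repository's configuration format. The assumption is that every image gets one fixed mask, and only the masked images and their masks are kept for training.

```python
import numpy as np


def make_corrupted_dataset(images, drop_prob, seed=0):
    """Hypothetical helper: random-inpainting corruption for a whole dataset.

    images: float array of shape (n, height, width, channels).
    Returns (corrupted_images, masks). Each image gets one mask, sampled once,
    and the mask is shared across color channels.
    """
    rng = np.random.default_rng(seed)
    n, h, w, _ = images.shape
    # 1 = pixel kept, 0 = pixel erased; each pixel is erased with probability drop_prob.
    masks = (rng.random((n, h, w, 1)) >= drop_prob).astype(images.dtype)
    # Broadcasting applies the single-channel mask to every channel.
    return images * masks, masks


rng = np.random.default_rng(123)
clean = rng.random((4, 16, 16, 3))
corrupted, masks = make_corrupted_dataset(clean, drop_prob=0.6)
# From here on, only `corrupted` and `masks` would be stored for training.
print(masks.mean())  # fraction of pixels kept, expected to be close to 1 - drop_prob
```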

Fine-Tuning and Customization: A Developer's Guide

Ambient Diffusion offers extensive customization options, especially when it comes to fine-tuning:

- Hyperparameter Tuning: The train.py script allows for extensive hyperparameter tuning, including learning rate, batch size, and architecture details.

- Custom Corruption Levels: You can specify custom corruption levels using the --corruption-level flag during training.

- Model Evaluation: The framework includes built-in support for various evaluation metrics, including FID scores and inpainting metrics.
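FID fits a Gaussian to feature embeddings of real and generated images and measures the Fréchet distance between the two fits: FID = ||mu_r - mu_g||^2 + Tr(Sigma_r + Sigma_g - 2 (Sigma_r Sigma_g)^(1/2)). The NumPy sketch below computes that formula from precomputed feature arrays. It is not the framework's evaluation code: standard FID uses Inception-v3 features, which this sketch assumes have already been extracted, and the function names are hypothetical.

```python
import numpy as np


def _sqrt_psd(mat):
    # Square root of a symmetric PSD matrix via eigendecomposition;
    # tiny negative eigenvalues from round-off are clipped to zero.
    vals, vecs = np.linalg.eigh(mat)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T


def frechet_distance(feats_real, feats_gen):
    """FID between two feature sets, each of shape (n_samples, dim)."""
    mu1, mu2 = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    sigma1 = np.cov(feats_real, rowvar=False)
    sigma2 = np.cov(feats_gen, rowvar=False)
    # Tr((S1 S2)^(1/2)) equals Tr((R S2 R)^(1/2)) with R = S1^(1/2),
    # and R S2 R is symmetric PSD, so its eigenvalues are real and non-negative.
    root1 = _sqrt_psd(sigma1)
    middle = root1 @ sigma2 @ root1
    middle = (middle + middle.T) / 2  # remove round-off asymmetry
    tr_covmean = np.sqrt(np.clip(np.linalg.eigvalsh(middle), 0.0, None)).sum()
    diff = mu1 - mu2
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)


rng = np.random.default_rng(0)
feats = rng.standard_normal((500, 8))
print(frechet_distance(feats, feats))        # close to 0 for identical feature sets
print(frechet_distance(feats, feats + 1.0))  # close to 8: the mean shifts by 1 in each of 8 dims
```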

Taken together, these options make Ambient Diffusion a customizable framework for training on corrupted data rather than a single fixed model.

Comparative Analysis: Ambient Diffusion vs. Other Diffusion Models

Ambient Diffusion differs from standard diffusion models in a few specific technical respects. The comparison below covers algorithms, performance, and customization.

Algorithmic Differences

- Noise Injection: Standard diffusion models corrupt clean training images with Gaussian noise at many noise levels and learn to reverse that process. Ambient Diffusion keeps the Gaussian noising but starts from images that are already corrupted and applies an additional measurement corruption on top, such as erasing further pixels.

- Learning Mechanism: A standard diffusion model learns to predict the clean image from a noisy one. Ambient Diffusion instead learns the conditional expectation of the full, uncorrupted image given the further-corrupted noisy observation. Because the model cannot tell which of its missing pixels were removed by the extra corruption, it has to predict every pixel, which is what lets it learn the clean distribution without clean examples.

- Data Handling: Ambient Diffusion is explicitly designed to work with corrupted training data, whereas standard diffusion training assumes access to clean samples.
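The training objective described above can be sketched in NumPy for a single image, under two assumptions: noise is added in the variance-exploding form x_t = x_0 + sigma * eps, and the loss is a mean squared error. `ambient_loss` and `identity_denoiser` are hypothetical names, and the denoiser merely stands in for the real network. The function never receives the clean image x_0; it only uses the corrupted observation y = A x_0 and the mask A.

```python
import numpy as np


def ambient_loss(denoiser, y, mask_a, delta, sigma, rng):
    """One Monte Carlo sample of an Ambient Diffusion-style loss (sketch).

    y:      corrupted observation A * x0 (the clean x0 is never needed).
    mask_a: binary mask A of observed pixels.
    """
    # Further corruption: drop each observed pixel with probability delta.
    mask_b = (rng.random(mask_a.shape) >= delta).astype(y.dtype)
    mask_a_tilde = mask_a * mask_b
    # Because A_tilde is a subset of A, A_tilde * (y + sigma * eps)
    # equals A_tilde * (x0 + sigma * eps): computable from corrupted data.
    eps = rng.standard_normal(y.shape)
    noisy_input = mask_a_tilde * (y + sigma * eps)
    # The model sees only the further-corrupted noisy image and its mask.
    x0_hat = denoiser(noisy_input, mask_a_tilde, sigma)
    # Penalize errors only where A observed the image: A * x0_hat - A * x0.
    residual = mask_a * x0_hat - y
    return float(np.mean(residual ** 2))


def identity_denoiser(noisy, mask, sigma):
    # Hypothetical stand-in for the network: returns its input unchanged.
    return noisy


rng = np.random.default_rng(0)
x0 = rng.random((8, 8))                        # used only to build toy data
mask_a = (rng.random((8, 8)) >= 0.5).astype(float)
y = mask_a * x0                                # what the training set actually contains
print(ambient_loss(identity_denoiser, y, mask_a, delta=0.2, sigma=0.5, rng=rng))
```

The key design choice is that the loss covers every pixel observed in A, including the ones hidden from the model by Ã, so the model cannot lower the loss by copying its visible input and must learn to fill in missing pixels.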

Performance Metrics

- FID Scores: Ambient Diffusion achieves competitive FID scores when its training data is corrupted. This is a significant advantage over standard models, which need clean data for optimal performance.

- Memorization: The framework includes a metric for the average distance from each generated sample to its closest neighbor in the training set. This measures memorization rather than image quality: larger distances mean generated samples are less likely to be near-copies of training images, which complements FID.

-

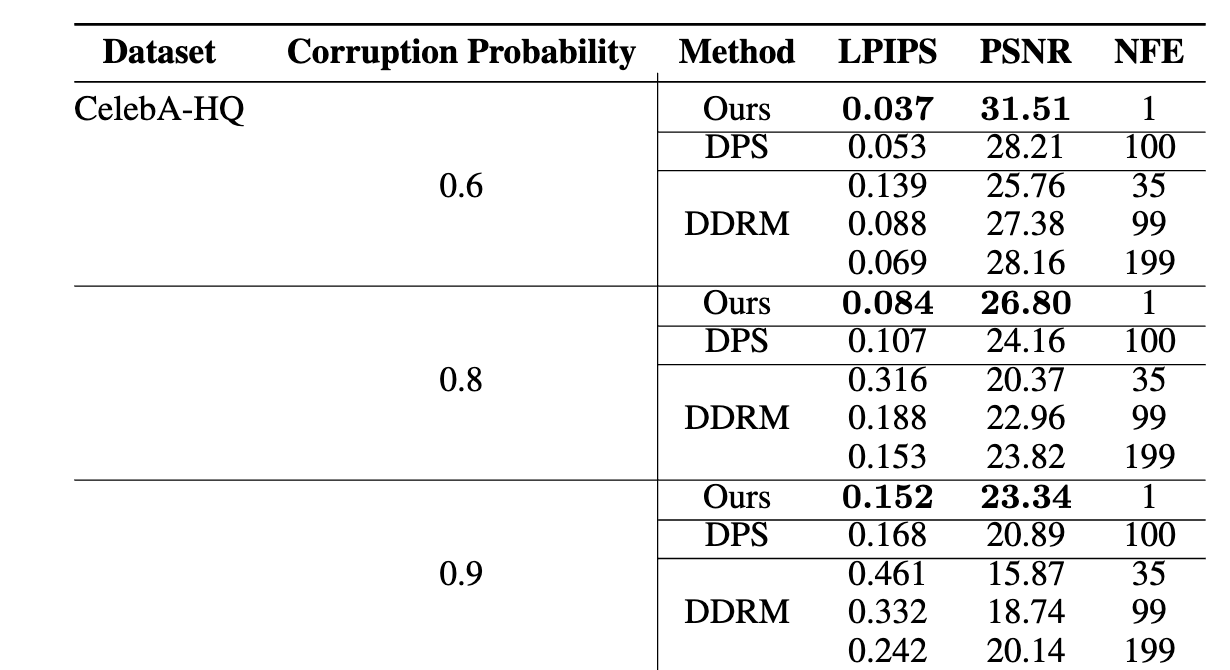

Inpainting Metrics: Ambient Diffusion excels in inpainting tasks, showing significant improvements over baseline methods. This is particularly important for applications like image restoration.
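The nearest-neighbor metric mentioned above can be sketched as follows. It assumes samples are flattened into vectors (raw pixels or any embedding; which representation the framework uses is not specified here) and uses plain L2 distance. `nearest_neighbor_distances` is a hypothetical name.

```python
import numpy as np


def nearest_neighbor_distances(generated, training):
    """L2 distance from each generated sample to its closest training sample.

    generated: (n_gen, dim); training: (n_train, dim).
    """
    # All pairwise squared distances at once: ||g||^2 - 2 g.t + ||t||^2.
    sq = (
        np.sum(generated ** 2, axis=1, keepdims=True)
        - 2.0 * generated @ training.T
        + np.sum(training ** 2, axis=1)
    )
    # Clip small negatives caused by floating-point round-off before the root.
    return np.sqrt(np.clip(sq.min(axis=1), 0.0, None))


training = np.array([[0.0, 0.0], [3.0, 4.0]])
generated = np.array([[3.0, 4.0], [6.0, 8.0]])
d = nearest_neighbor_distances(generated, training)
print(d, d.mean())  # d == [0.0, 5.0]: the first sample is an exact copy of a training point
```

A lower average distance signals that generated samples sit closer to training images, that is, more memorization.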

Customization and Flexibility

- Hyperparameter Tuning: Ambient Diffusion offers extensive options for hyperparameter tuning, including the ability to adjust corruption levels dynamically. This level of customization is often missing in other diffusion models.

- Codebase Extensibility: The framework's PyTorch implementation and well-documented codebase make it easier for developers to extend its capabilities.

Challenges and Limitations: The Technical Hurdles for Ambient Diffusion

Ambient Diffusion also has challenges and limitations that could affect its adoption and performance. The sections below examine them from a technical standpoint.

Computational Complexity

- Memory Requirements: Ambient Diffusion models, especially those trained on high-resolution datasets, can be memory-intensive. For instance, a model trained on 512x512 images could require up to 16GB of GPU memory.

- Processing Time: Training can take a long time. For example, training a model on the CelebA dataset with a standard configuration could take up to 48 hours on a Tesla V100 GPU.

Data Handling and Scalability

- High-Dimensional Data: While Ambient Diffusion handles corrupted data well, it may struggle with high-resolution data: the network has to process every pixel of every image, so compute and memory costs grow with resolution and can become a bottleneck.

- Batch Size Limitations: Memory constraints cap the batch size that fits on a GPU, which limits the model's scalability for large-scale applications.
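A common workaround for batch-size limits, not specific to Ambient Diffusion, is gradient accumulation: split a large batch into micro-batches that fit in memory and average their gradients before updating the weights. The NumPy sketch below uses a toy linear least-squares model (an assumption, chosen so the gradient has a closed form) to check that the accumulated gradient matches the full-batch gradient when micro-batches have equal size. The function names are hypothetical.

```python
import numpy as np


def mse_grad(w, x, y):
    # Gradient of mean((x @ w - y)^2) with respect to w.
    return 2.0 * x.T @ (x @ w - y) / len(y)


def accumulated_grad(w, x, y, micro_batch):
    """Average per-micro-batch gradients; equals the full-batch gradient
    when len(y) is a multiple of micro_batch."""
    if len(y) % micro_batch != 0:
        raise ValueError("use equal-sized micro-batches")
    total = np.zeros_like(w)
    steps = len(y) // micro_batch
    for start in range(0, len(y), micro_batch):
        sl = slice(start, start + micro_batch)
        # In a real training loop, only this micro-batch's activations
        # would need to be held in memory at this point.
        total += mse_grad(w, x[sl], y[sl])
    return total / steps


rng = np.random.default_rng(0)
x, y, w = rng.standard_normal((64, 5)), rng.standard_normal(64), rng.standard_normal(5)
print(np.allclose(accumulated_grad(w, x, y, micro_batch=16), mse_grad(w, x, y)))
```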

Algorithmic Limitations

- Local Minima: Like many machine learning models, Ambient Diffusion is susceptible to getting stuck in local minima during training, which could affect the model's performance.

- Sensitivity to Hyperparameters: The model's performance is highly sensitive to the choice of hyperparameters like learning rate, corruption level, and measurement distortion. Incorrect settings can lead to suboptimal results.
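Sensitivity to hyperparameters is usually handled by searching over them. The sketch below is a plain random search in standard-library Python; the search ranges, the `delta` parameter (standing in for the extra-corruption level), and `toy_objective` are all hypothetical. In practice the objective would be something like the FID of a model trained with the sampled configuration, which is far more expensive to evaluate.

```python
import math
import random


def sample_config(rng):
    # Learning rates are usually searched on a log scale; ranges are illustrative assumptions.
    return {
        "lr": 10 ** rng.uniform(-5, -3),
        "delta": rng.uniform(0.05, 0.3),  # hypothetical extra-corruption probability
    }


def random_search(evaluate, n_trials, seed=0):
    """Return (best_score, best_config); lower scores are better (e.g., FID)."""
    rng = random.Random(seed)
    best = (math.inf, None)
    for _ in range(n_trials):
        cfg = sample_config(rng)
        score = evaluate(cfg)
        if score < best[0]:
            best = (score, cfg)
    return best


def toy_objective(cfg):
    # Made-up stand-in for "train, then measure FID"; not real results.
    return (math.log10(cfg["lr"]) + 4) ** 2 + (cfg["delta"] - 0.1) ** 2


score, cfg = random_search(toy_objective, n_trials=200)
print(score, cfg)
```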

Evaluation Metrics

- FID Score Variability: While Ambient Diffusion achieves competitive FID scores, these can vary depending on the dataset and corruption level. For instance, an FID score of 20 on the CelebA dataset could jump to 35 when the corruption level is increased by 20%.

- Memorization Metrics: The framework's memorization metric, the average distance to the closest neighbor in the training set, has not been compared against other state-of-the-art models, leaving a gap in performance evaluation.

These challenges and limitations give a more precise picture of where Ambient Diffusion is strong and where it needs work. Addressing them will be crucial for the framework's future development and adoption in various applications.

Conclusion: The Technical Depth and Future Prospects of Ambient Diffusion

Ambient Diffusion has carved a unique niche in the realm of diffusion models, particularly with its specialized focus on training on corrupted data. Its algorithmic approach, which involves additional measurement distortion and conditional expectation learning, sets it apart from traditional diffusion models. The framework's performance metrics, including competitive FID scores and robust inpainting capabilities, further attest to its efficacy.

However, it's not without its challenges. From computational complexity to data handling limitations and algorithmic constraints, Ambient Diffusion has several technical hurdles to overcome. Addressing these will be pivotal for its future development and broader adoption.

Future Research and Development

Given the framework's current limitations, several avenues are open for future research:

- Optimization Algorithms: Research could focus on developing new optimization algorithms to reduce training time and computational requirements.

- Dimensionality Reduction: Techniques for handling high-dimensional data more efficiently could be a significant advancement.

- Hyperparameter Optimization: Automated methods for hyperparameter tuning could make the framework more user-friendly and efficient.

- Benchmarking: Comprehensive benchmarking against other state-of-the-art models will be crucial for objectively assessing Ambient Diffusion's performance.

Final Thoughts

Ambient Diffusion offers a new way to train generative models when only corrupted data is available. Its training objective, flexibility, and results make it a promising tool for a range of applications, and overcoming its current limitations will be key to unlocking its full potential.

By understanding both its strengths and weaknesses, we can appreciate what Ambient Diffusion brings to the table: a specialized framework for one of the harder problems in data science, learning a clean data distribution from corrupted observations.

As research on machine learning with corrupted data continues, Ambient Diffusion provides a concrete foundation for the work ahead.