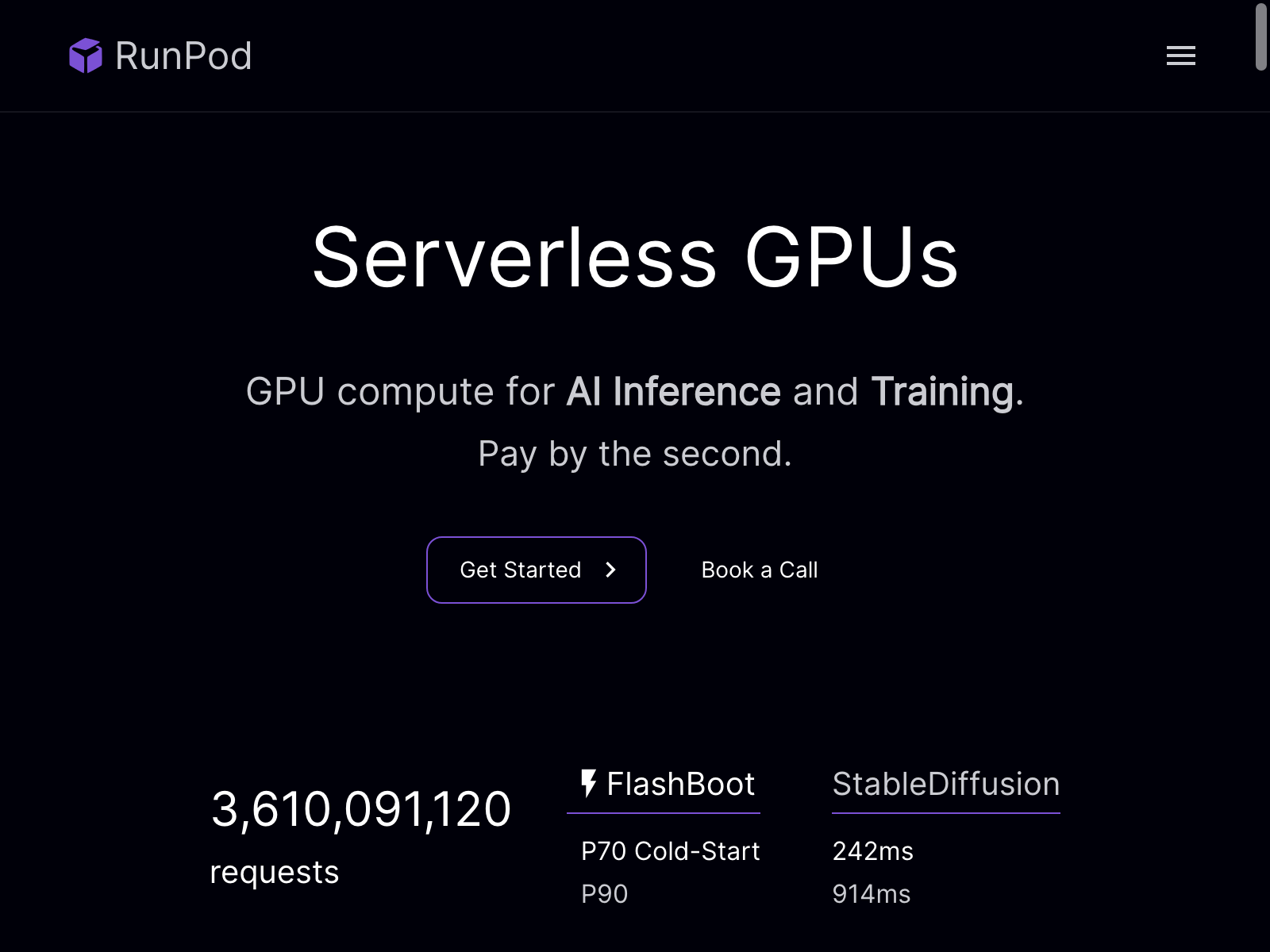

Runpod Serverless is a cloud service that provides serverless GPUs for AI inference and training. Users can deploy machine learning models to production without managing infrastructure or planning for scale, because GPU capacity is provisioned on demand rather than on dedicated hardware.

One of the standout features of Runpod Serverless is its user-friendly interface, which makes it easy to upload and deploy machine learning models. The tool supports popular frameworks such as TensorFlow and PyTorch, making it compatible with a wide range of AI projects. Whether you are a data scientist, researcher, or developer, Runpod Serverless offers a streamlined experience for deploying and scaling AI models.
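
As a rough illustration of what deploying a model can involve, the sketch below shows a request handler of the kind a serverless worker might run. It assumes a job arrives as a dictionary with an `input` field; `load_model`, `handler`, and the toy word-count "model" are hypothetical stand-ins, not Runpod's API. A real deployment would load a TensorFlow or PyTorch model and register the handler as described in the Runpod documentation.

```python
from typing import Any, Callable, Dict


def load_model() -> Callable[[str], Dict[str, Any]]:
    """Hypothetical stand-in for loading a TensorFlow or PyTorch model."""

    def predict(text: str) -> Dict[str, Any]:
        # Toy "model": reports a word count instead of a real prediction.
        return {"tokens": len(text.split())}

    return predict


# Load once at import time so repeated requests reuse the same model.
MODEL = load_model()


def handler(job: Dict[str, Any]) -> Dict[str, Any]:
    """Validate the job payload (assumed shape) and return the model output."""
    payload = job.get("input") or {}
    text = payload.get("text")
    if not isinstance(text, str):
        return {"error": "input.text must be a string"}
    return {"output": MODEL(text)}


if __name__ == "__main__":
    print(handler({"input": {"text": "serverless GPU inference"}}))
```

Loading the model outside the handler is a deliberate choice in this sketch: the expensive step runs once per worker rather than once per request.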

Typical inference use cases include image recognition, natural language processing, and recommendation systems, where serverless GPUs help keep response times low. Runpod Serverless can also be used to train machine learning models: with on-demand GPU resources, users can shorten training time and experiment with larger datasets, which can lead to more accurate models.

Deployment is similarly straightforward. Models can be uploaded and served through the same interface and then called from existing applications or APIs, which makes the service suitable for production environments.
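
To make the integration step more concrete, here is a minimal sketch of how an existing application might prepare a call to a deployed endpoint. The URL pattern, the `runsync` route, the `{"input": ...}` body, and the bearer-token header are assumptions to verify against the official API reference; `build_inference_request`, the endpoint ID, and the key are hypothetical placeholders.

```python
import json
import urllib.request

# Assumption: base URL and route layout; confirm against the official docs.
BASE_URL = "https://api.runpod.ai/v2"


def build_inference_request(
    endpoint_id: str, api_key: str, payload: dict
) -> urllib.request.Request:
    """Build (but do not send) a synchronous inference request."""
    body = json.dumps({"input": payload}).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/{endpoint_id}/runsync",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_inference_request("my-endpoint", "MY_API_KEY", {"text": "hello"})
    print(req.get_method(), req.full_url)
```

The request is only constructed here; an application would pass it to `urllib.request.urlopen` (or use an HTTP client of its choice) and parse the JSON response.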

Runpod Serverless uses a pay-as-you-go pricing model, so users pay for what they consume instead of buying dedicated hardware, which reduces infrastructure costs. GPU resources also scale automatically with demand, so capacity follows the workload as it grows or shrinks.
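
The trade-off between pay-as-you-go and dedicated hardware comes down to simple arithmetic, sketched below. Every number in the example is hypothetical and chosen only for illustration (it is not Runpod's pricing), and the function names are made up for this sketch.

```python
def estimate_usage_cost(
    requests: int, seconds_per_request: float, price_per_gpu_second: float
) -> float:
    """Pay-as-you-go cost: requests x GPU seconds per request x price per second."""
    if requests < 0 or seconds_per_request < 0 or price_per_gpu_second < 0:
        raise ValueError("inputs must be non-negative")
    return requests * seconds_per_request * price_per_gpu_second


def break_even_requests(
    dedicated_cost: float, seconds_per_request: float, price_per_gpu_second: float
) -> float:
    """Request volume at which usage-based cost equals a fixed dedicated cost."""
    cost_per_request = seconds_per_request * price_per_gpu_second
    if cost_per_request <= 0:
        raise ValueError("cost per request must be positive")
    return dedicated_cost / cost_per_request


if __name__ == "__main__":
    # Hypothetical figures: 10,000 requests, 2 s each, 0.0005 per GPU second.
    print(round(estimate_usage_cost(10_000, 2.0, 0.0005), 2))
    # Hypothetical dedicated GPU costing 500 per month.
    print(round(break_even_requests(500.0, 2.0, 0.0005)))
```

Below the break-even volume, usage-based billing is the cheaper option under these assumptions; above it, a dedicated GPU may be worth considering.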

As with any tool, there are pros and cons to consider. The pros of Runpod Serverless include hassle-free access to serverless GPU resources, support for popular ML frameworks, seamless deployment and integration, scalability for handling large workloads, and cost-effectiveness with pay-as-you-go pricing. On the flip side, Runpod Serverless is limited to GPU-based AI tasks and depends on cloud-based infrastructure.

Detailed pricing information is available on the Runpod Serverless pricing page on the official website. A free trial with limited resources is also available for users who want to try the tool.

To conclude, Runpod Serverless is a powerful AI tool that offers serverless GPUs for AI inference and training. With its user-friendly interface, compatibility with popular ML frameworks, and scalability, it provides a seamless solution for deploying and scaling ML models. Whether you are involved in AI inference, training, or model deployment, Runpod Serverless offers the necessary tools and resources to optimize your AI projects.